In recent years, research in artificial intelligence (AI) and machine learning (ML) has made huge leaps. There is now hardly any doubt that this field will change many aspects of our lives over the next decade, and video and film editing will be no exception.

All major manufacturers have already integrated AI engines into their editing, compositing and grading systems. These are proprietary solutions, however: permanently compiled in and available only as ready-made special effects. With Nuke, The Foundry has now taken a more radical approach and provides an open client/server interface for machine learning projects.

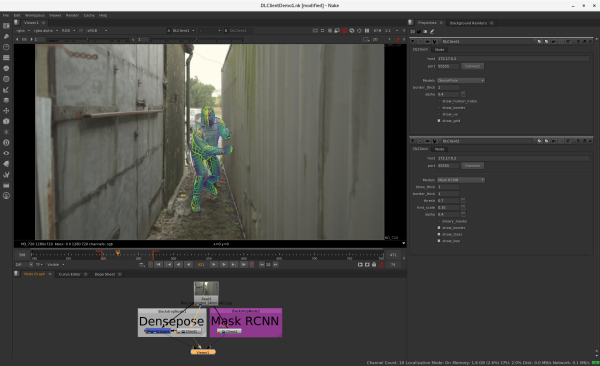

Acting as a client, the plugin sends frames and additional parameters to a configurable server. There, a Python process receives the frames and can feed them into common AI frameworks such as TensorFlow or PyTorch. On such a server, GPUs can run the learning algorithms in parallel and massively accelerated. The processed frames are then sent back to Nuke, where the plugin behaves like a normal effect node for the user.
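
To make the round trip concrete, here is a minimal sketch of such a frame server in plain Python. It assumes a made-up wire format (a length-prefixed JSON header for the parameters, followed by length-prefixed raw 8-bit pixels); this is not the actual protocol used by The Foundry's server, and `run_model` is a hypothetical stand-in that simply inverts pixel values where a real server would call TensorFlow or PyTorch.

```python
import json
import socket
import struct
import threading

# Hypothetical wire format (NOT the real Nuke ML server protocol):
# [4-byte header length][JSON header][4-byte payload length][raw pixel bytes]
_LEN = struct.Struct("!I")  # unsigned 32-bit length, network byte order


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, or raise if the peer closes the connection early."""
    chunks = []
    remaining = n
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def send_message(sock: socket.socket, params: dict, pixels: bytes) -> None:
    """Send the parameters (as JSON) followed by the raw frame data."""
    header = json.dumps(params).encode("utf-8")
    sock.sendall(_LEN.pack(len(header)) + header + _LEN.pack(len(pixels)) + pixels)


def recv_message(sock: socket.socket) -> tuple[dict, bytes]:
    """Receive one message and return (parameters, raw frame data)."""
    (header_len,) = _LEN.unpack(_recv_exact(sock, _LEN.size))
    params = json.loads(_recv_exact(sock, header_len).decode("utf-8"))
    (payload_len,) = _LEN.unpack(_recv_exact(sock, _LEN.size))
    return params, _recv_exact(sock, payload_len)


def run_model(params: dict, pixels: bytes) -> bytes:
    """Hypothetical stand-in for inference: invert 8-bit pixel values."""
    return bytes(255 - p for p in pixels)


def serve_one_request(conn: socket.socket) -> None:
    """Server side: receive a frame, process it, send the result back."""
    params, pixels = recv_message(conn)
    result = run_model(params, pixels)
    send_message(conn, {"width": params["width"], "height": params["height"]}, result)


if __name__ == "__main__":
    server_sock, client_sock = socket.socketpair()
    worker = threading.Thread(target=serve_one_request, args=(server_sock,))
    worker.start()
    # Client side (the role the Nuke plugin plays): send a 2x2 grayscale frame.
    send_message(client_sock, {"model": "invert", "width": 2, "height": 2}, bytes([0, 64, 128, 255]))
    reply_params, reply_pixels = recv_message(client_sock)
    worker.join()
    server_sock.close()
    client_sock.close()
    print(reply_params, list(reply_pixels))  # {'width': 2, 'height': 2} [255, 191, 127, 0]
```

Length-prefixing both parts keeps the receiver simple: it always knows exactly how many bytes to read, which matters because a single `recv` call may return only part of a large frame.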

This makes it possible to try out the latest AI research directly in Nuke with relatively little coding effort. The Foundry certainly wouldn't mind if its server became the platform for AI projects in the video and film world: developers would no longer have to reinvent the wheel just to feed frames into their networks, and Nuke users would get direct access to current AI research.

With the new plugin you can already try out a deblurring algorithm, a pose recognizer and an automatic masking tool. Since the ML frame server architecture has been made available as an open source project, it is also conceivable that Adobe or Blackmagic could integrate the same interface into their applications. In any case, we would very much welcome it if the same idea were not implemented all over again in competing standards...
