16:10 Sun, 27 September 2020 by Thomas Richter

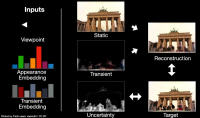

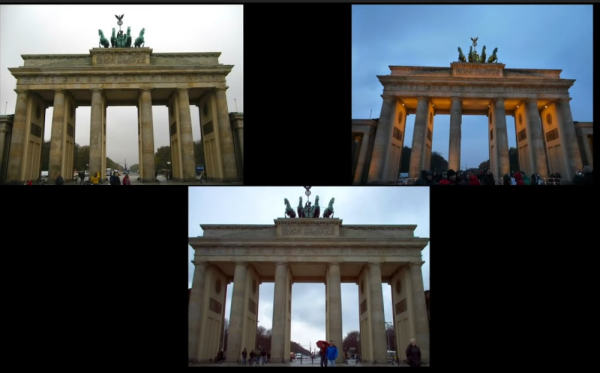

A team of Google researchers has presented a new algorithm they call NeRF-W. It trains a neural network on multiple photos of a scene to build a model from which new images of that scene can be synthesized from additional perspectives that none of the photos covers, and it can even produce virtual camera movements. The following clip demonstrates this very nicely using examples such as the Brandenburger Tor and the Fontana di Trevi:

A challenge for this algorithm, which is based on Neural Radiance Fields (NeRF), is that the photos were taken under extremely different lighting and weather conditions and also differ in other dynamic ways, for example through occluding objects such as cars, people or signs. The algorithm has to abstract away from these differences in order to obtain a 3D model of the static objects in a scene, including the lighting conditions prevailing in it.

Input photos with different lighting conditions

The model allows both camera pans and zooms. In addition, it can change the look of the new virtual views, for example to simulate a particular lighting situation (midday, or night with headlights).

Generated output

Naturally, the method has difficulty with those parts of a scene that appear in only a few photos, typically because they lie in the background and are visible only from certain perspectives. The nice thing about the new method is that it can use any photos from the internet, taken by different users with different cameras. Training on a scene with several hundred photos took about 2 days on 8 GPUs.

NeRF-W algorithm

The "Two Minute Papers" clip nicely demonstrates how much progress the new method has made within only a few months compared to the …
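For readers wondering what a "radiance field" actually computes: a NeRF-style model predicts a density and a colour for any 3D point, and each pixel of a new virtual view is obtained by compositing those predictions along the camera ray through that pixel. The Python sketch below shows that standard compositing step. The function `toy_field` (a dense sphere) and its scalar `appearance` parameter are hypothetical stand-ins for the trained network, loosely inspired by the per-photo appearance codes NeRF-W uses to vary lighting; this is an illustration under those assumptions, not Google's implementation.

```python
import math
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]
Point = Tuple[float, float, float]


def render_ray(
    sigmas: Sequence[float],
    colors: Sequence[Color],
    t_vals: Sequence[float],
) -> Tuple[Color, List[float]]:
    """NeRF-style volume rendering of one ray.

    sigmas[i]: density at sample i; colors[i]: RGB at sample i;
    t_vals[i]: distance of sample i along the ray (increasing).
    Returns the composited pixel colour and the per-sample weights.
    """
    n = len(t_vals)
    if not (len(sigmas) == len(colors) == n):
        raise ValueError("sigmas, colors and t_vals must have the same length")
    # Distance between neighbouring samples; the last interval is treated as unbounded.
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(n - 1)] + [1e10]
    transmittance = 1.0  # share of light that reaches sample i unabsorbed
    rgb = [0.0, 0.0, 0.0]
    weights: List[float] = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return (rgb[0], rgb[1], rgb[2]), weights


def toy_field(point: Point, appearance: float) -> Tuple[float, Color]:
    """Hypothetical stand-in for the trained network: a dense unit sphere
    whose colour is scaled by a scalar appearance code (0 = dark, 1 = bright)."""
    x, y, z = point
    sigma = 50.0 if x * x + y * y + z * z <= 1.0 else 0.0
    b = max(0.0, min(1.0, appearance))
    return sigma, (b, b, 0.8 * b)


def render_pixel(origin: Point, direction: Point, appearance: float,
                 near: float = 0.0, far: float = 6.0, n_samples: int = 64) -> Color:
    """Sample the field at evenly spaced points along the ray and composite them."""
    t_vals = [near + (far - near) * i / (n_samples - 1) for i in range(n_samples)]
    sigmas: List[float] = []
    colors: List[Color] = []
    for t in t_vals:
        point = (origin[0] + t * direction[0],
                 origin[1] + t * direction[1],
                 origin[2] + t * direction[2])
        sigma, color = toy_field(point, appearance)
        sigmas.append(sigma)
        colors.append(color)
    rgb, _ = render_ray(sigmas, colors, t_vals)
    return rgb


night = render_pixel((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), appearance=0.2)
midday = render_pixel((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), appearance=1.0)
print("night:", night, "midday:", midday)
```

Rendering the same ray with `appearance=0.2` and `appearance=1.0` yields a darker and a brighter pixel of identical geometry, a miniature version of showing one learned scene at night or at midday.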

German version of this page: KI generiert virtuelle Kamerafahrten aus Photos (AI generates virtual camera movements from photos)