[09:57 Sat,9.April 2022 by Thomas Richter] |

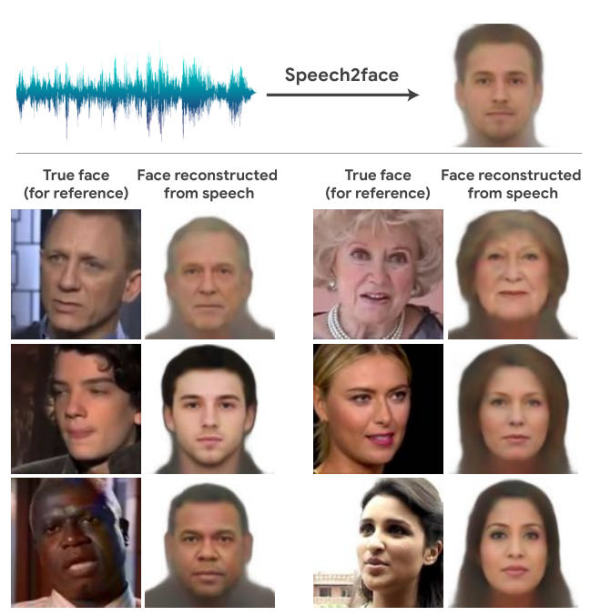

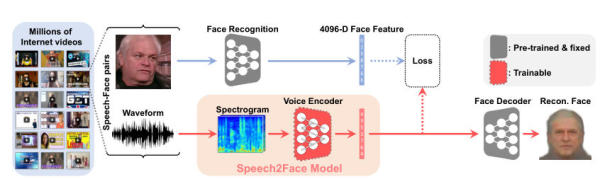

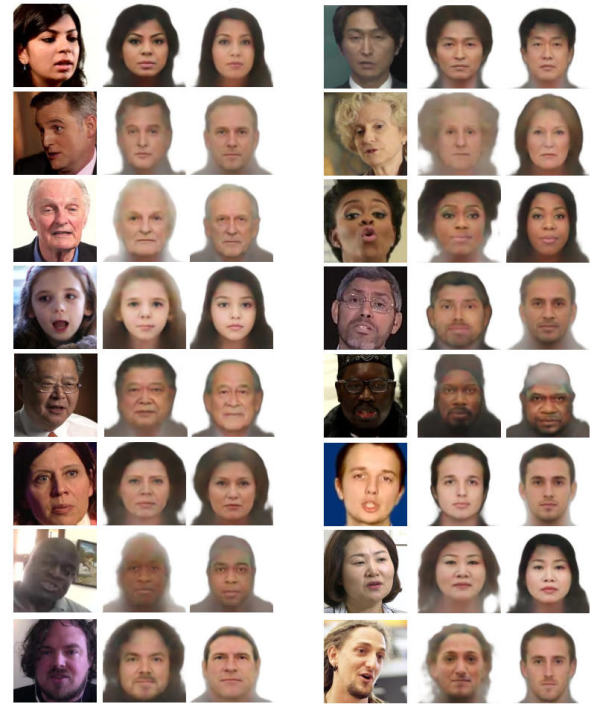

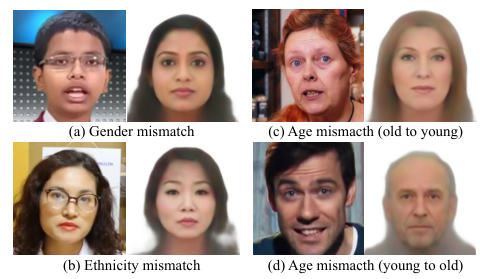

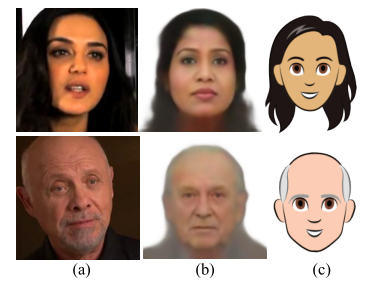

We were made aware of the extremely interesting AI project "Speech2Face" by an article on  Speech2Face The deep neural network was trained on millions of videos showing people talking to each other - the faces appearing in the videos were recognized and the corresponding voices were analyzed by spectrogram. Based on these spectrograms, a face matching a particular voice is then selected during the search. In most cases, the longer the voice sample, the greater the similarity of the face (6 seconds yields significantly better results than 3 second samples. Thus, the Deep Learning algorithm independently learned correlations between the sound of voices and the appearance of the speaker. Based on this, the algorithm then estimates the speaker&s age, gender, and other features and generates a matching face.  Speech2Face algorithm To further assess the performance of the AI and compare the real face with the generated one, a standardized image of the face from the frontal view with identical lighting of a speaking person was also synthesized from the videos. And here, too, an often astonishing similarity of the real faces with the faces generated via Speech2Face can be seen, far beyond the matching age and gender.  Speech2Face However, there were also a number of cases where generated face differed greatly from the original face of the speaker in terms of age, gender or ethnicity. In the latter case, especially when a person does not speak in one of the languages of the respective (apparent) ethnicity.  Speech2Face problems The researchers themselves therefore also caveat that although their model reveals statistical correlations between facial features and voices of speakers in the training data, it does not represent the entire world population due to the training data used (mainly a collection of educational videos from YouTube) and the model is influenced by this uneven distribution of the data. Therefore, they recommend that any practical application of the method use training data representative of the intended user population. Use cases would be, for example, the automatic generation of avatars matching a voice (even stylized as a cartoon) in cases of online conversations where only the sound is available. Likewise, computer-generated voices of virtual assistants, for example, could be given a face via Speech2Face. Just as well, however, a phantom image of an extortionist could be created in the context of police investigations, for example, of which only a voice recording exists.  Speech2Face cartoon faces We are not aware of any further development of the Speech2Face algorithm, but if one is published it will probably be much better than the now more than 2 years "old" method due to the enormous progress in DeepLearning. As is often the case with DeepLearning algorithms, there is a danger that the algorithm&s "estimate" based on a lot of training data - as good as it usually is - will be taken for true without question. It is similar with deutsche Version dieser Seite: KI generiert erschreckend exakte Portraits - nur anhand der Stimme |