[09:37 Sun, 26 May 2019 by Thomas Richter]

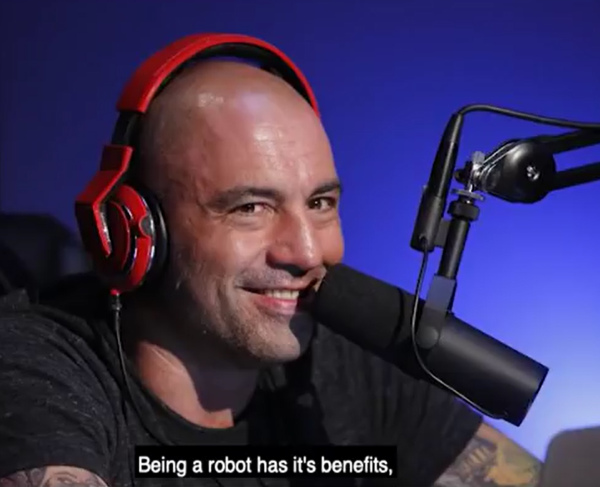

Dessa, a company specializing in AI, has introduced a new speech synthesis system that, at least in the samples provided, can hardly be distinguished from a real voice. It demonstrates this with the voice of Joe Rogan, a well-known stand-up comedian, commentator and podcaster in the USA, in a YouTube video containing speech examples of both the synthesized voice and his real one. Joe Rogan's voice was probably a particularly good demo subject because his 1,300 podcasts, among other things, provide an enormous amount of training material, a prerequisite for machine learning algorithms. How good a voice sounds when less training material is available remains to be seen.

If the synthetic voice is reliably as good as in the examples, many very useful but of course also shady applications will soon be conceivable. This is because, unlike the right to one's own image, there is no right to one's own voice as such, only a right to sound recordings of one's own voice as part of the general right of personality. A recording made with a simulated voice is not covered by this.

In film, being able to use real actors' voices when dubbing dialogue into another language would of course be groundbreaking. Here it would be important to know how good the voice simulation sounds in another language; ideally, lip sync would also be handled automatically via deep learning. Other possible applications lie within existing synthetic speech output functions, which could be made much more vivid by simulating the voice of a well-known personality or a friend, for example when reading books aloud. Likewise, personal digital assistants such as Siri or Alexa could read out appointment reminders in the user's own voice so that they get noticed. A fitness app, for example, could give instructions in Arnold Schwarzenegger's voice.
A wonderful application would be for people who have lost their voice due to an illness (such as ALS), provided of course that old recordings of their voice exist as training material. They could then speak to others in their own voice via text input. Such voice simulation will of course also be used for the more banal purpose of creating internet memes. Dessa itself also gives some examples of how such voice simulations could be abused: voice recordings could be falsified at will, for example to discredit someone. Together with the use of …

For both the positive and the negative applications, it would be interesting to know how much training material the algorithm needs to simulate a voice deceptively realistically. And to truly convince someone who is familiar with the respective speaker, the speaker's peculiarities of expression and choice of words would also have to be copied. At the moment, some know-how, computing power and data are still required, but in a few years (or even sooner) the technology will evolve to the point where only a few seconds of audio are needed to create a lifelike replica of any existing voice. And especially with deep learning based technologies, which do not rely on highly specialized algorithms that can be protected by copyright, it does not take long until a new technology is imitated in similar or even better quality.

German version of this page: AI simuliert menschliche Stimmen täuschend echt