The AI video generator Runway Gen-2 has received an update (unspecified, as always) that seems to be quite something: the user community is delighted with the jump in quality of the generated clips. Several videos created after the update have already been posted on X, formerly Twitter (twitter.com/_Borriss_/status/1720316126390092177), and most of them are on a completely different level from much of what was seen before.

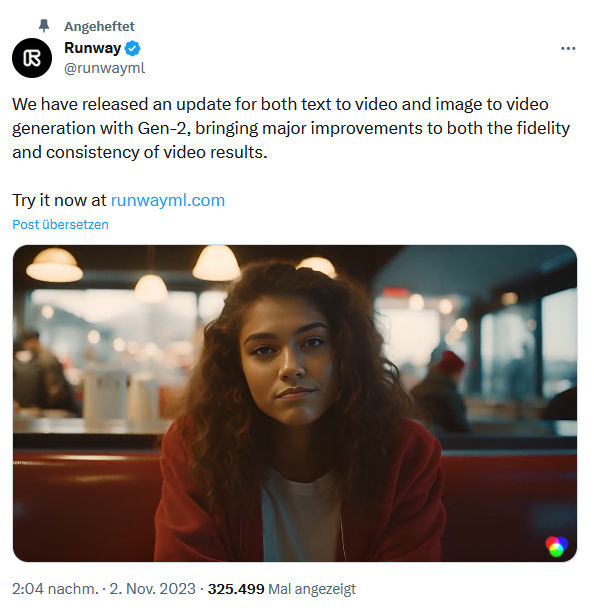

The image sequences now hold together better thanks to improved, though not perfect, temporal coherence: there is less wobbling than before, and the motifs look more realistic. However, people still cannot walk or move realistically, and anatomical glitches remain, for example when blinking. Parts of the image that should stay still also tend to flicker, while other areas appear to morph slightly.

The material rarely looks like filmed footage. It mostly resembles high-quality and correspondingly elaborate computer animation, yet, and this is the truly remarkable part, it was created in minutes from text prompts and/or a template image. In animated film, these steadily expanding possibilities are already turning things upside down, and not only in terms of workflows: we will certainly also see these dreamy, dreamed-up looks adopted as a new visual language.