[13:16 Thu, 9 March 2023 by Thomas Richter]

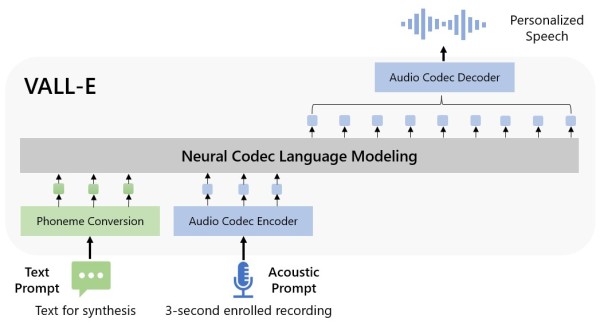

It has been feared for some time, and now it has happened: the first telephone scammers are using voice AIs to get elderly people to transfer money by pretending that one of their children or grandchildren is in an emergency. Of course, this works much better with a voice that really sounds like that of the (grand)child - and that is exactly what modern voice AIs make possible, since they can deceptively imitate any voice. Only [...]

Which AI the scammers used is not known, but there are several online services such as [...]

Goodbye to telephone banking?

Also troubling is [...] Until now, a voice sample was considered to be an extremely secure means of identification - statements such as those made by [...] OpenAI [...] VALL-E voice AI. Such a voice ID algorithm, which can analyze and verify a voice, is now outwitted by a voice AI, which can decode voices with all their characteristics equally well, but can also deceptively simulate a voice on the basis of this data. No case is yet known in which phone banking has actually been hacked by a voice AI, but technologically, authentication by voice (at least without additional strong security measures) is now obsolete. In the future [...]

Using such simulated voices, much more targeted scams are of course possible with a little more effort - for example, a company's accounting department could be tricked into transferring larger sums of money by a call that appears to come from the boss. There are no limits to creativity when it comes to the possible applications of voice fakes on a large or small scale - especially if money can be earned or a person can be discredited. We will probably see a lot more "interesting" developments in this direction. A first warning was the [...]

Protection against imitation of one's own voice?

For the very near future, this development means that a voice alone will no longer be sufficient to identify a person.
To avoid having one's voice imitated, one would have to avoid leaving any public recordings of one's own voice that could be used for voice sampling. But that would mean not posting any video clips of oneself speaking on the web at all, and preventing anyone else from making such recordings - an impossible undertaking in many cases. Video and voice recordings of many people can already be found on the web and can no longer be deleted - so the damage is already done. And since even a few seconds or a few words of recorded speech are enough, even that might not suffice - a single telephone conversation in which one answers the caller with a few sentences could yield enough voice material for a simulation.

Distrust every voice?

This means that from now on one should distrust any audio recording of a voice - or even a real-time conversation - if what is said is unusual or in any way suggests fraud, unless the call actually comes from the phone number matching the person or can be authenticated in some other way. Paranoid distrust as a basic attitude - an unattractive prospect. And in the social context, of course, the same applies: from now on, no audio-only recording of a well-known personality is to be trusted unless its authenticity can be clearly verified.