[16:09 Fri, 24 March 2023 by blip]

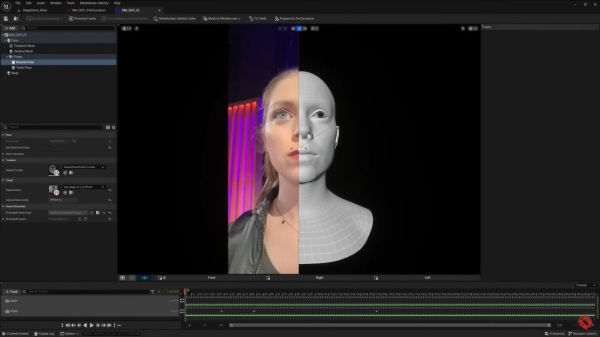

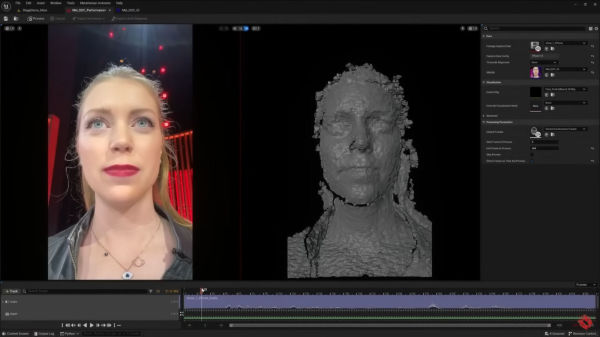

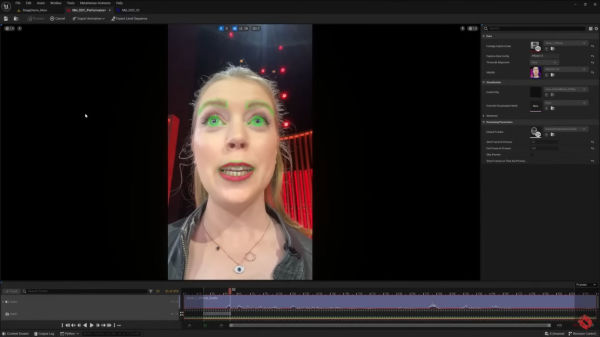

There's a lot happening in the motion/performance capturing space right now, and the latest news comes from Epic Games: MetaHuman Animator brings face capturing via iPhone. With a simple iPhone camera, i.e. without a 3D setup or markers, it will soon be possible to capture a facial performance within a few minutes and transfer it to a rigged "MetaHuman"; for the latter, of course, you will need sufficiently powerful hardware. If you want, you can still use a helmet camera and possibly achieve even more accurate results.

The presentation shows how well this works: even fine nuances of facial expression are carried over, although it is of course still recognizable as an animation.

For the system to apply facial expressions as faithfully as possible to any avatar, the face to be tracked must first be analyzed. First, a MetaHuman actor identity is created, with a standardized rig and mesh; only a short recorded sequence is required for this. With the help of this identity, the position changes of the rig during the tracked performance can be detected and transferred to the target MetaHuman character.

[Images: MetaHuman actor identity; capturing performance]

Since MetaHuman Animator also supports timecode, performance capturing of a face can easily be combined with body capturing. It is also supposed to imitate tongue movements realistically during speech by using audio data. You can already guess it: deep learning algorithms are at work here once again.

The new MetaHuman Animator features will be part of the MetaHuman plugin for Unreal Engine, which (like the engine itself) can be downloaded for free. However, it won't be publicly available for a few months. MetaHuman Animator works with an iPhone 11 or later; also required is the free Live Link Face app for iOS, which will be updated with some additional capture modes to support this workflow.
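To illustrate why timecode support makes it easy to combine face and body capture, here is a minimal Python sketch of the general idea, not Epic's implementation. It assumes non-drop-frame timecode in the form `HH:MM:SS:FF`, an integer frame rate shared by both recordings, and hypothetical function names chosen only for this example.

```python
def timecode_to_frames(timecode: str, fps: int) -> int:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if not (0 <= minutes < 60 and 0 <= seconds < 60 and 0 <= frames < fps):
        raise ValueError(f"invalid timecode for {fps} fps: {timecode}")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frame_offset(face_start: str, body_start: str, fps: int) -> int:
    """Frames by which the face take starts after the body take (negative if earlier)."""
    return timecode_to_frames(face_start, fps) - timecode_to_frames(body_start, fps)


# Hypothetical start timecodes of a face take and a body take at 30 fps.
offset = frame_offset("10:00:02:15", "10:00:01:00", fps=30)
print(f"Shift the face animation by {offset} frames to line it up with the body take.")
```

Because both devices stamp their recordings against the same clock, aligning the two takes reduces to a single subtraction rather than manual syncing by eye or by clap.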
By the way, an iPhone has recently become sufficient for simple motion capturing as well, or more precisely, two phones are needed. The matching app [...]