[15:07 Thu, 8 February 2024 by Rudi Schmidts]

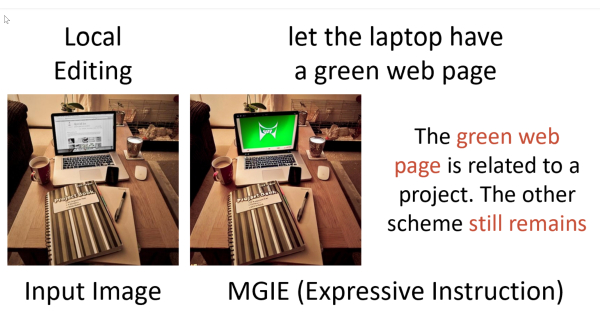

Together with the University of California, Santa Barbara, Apple has developed its own AI model that apparently combines a language model and a diffusion model to enable simple image manipulation for everyone. MGIE, the project described in the accompanying paper, is not the first of its kind, and with a little know-how you can already use Stable Diffusion or Adobe's Firefly to solve such tasks. It remains to be seen, however, what the quality of MGIE's output will be. In any case, the paper itself sees Photoshop-like tasks as the intended use.

What will be interesting is how strongly the parts of an image that are supposed to stay unchanged are affected, because current AI models usually alter more there than the user would like. If you take a closer look at the demo modification, you will quickly understand what we mean, because it is still full of typical AI artefacts.

And even if other sites may see it differently, we believe it is basically a bad sign when Apple releases the code for such a tool, because it then becomes rather unlikely that it will find its way into an upcoming product. But of course, one should not judge the day before it is over. We will certainly try to get a more concrete picture of MGIE as soon as the current rush on the HuggingFace demo page has died down a little.