Tonight Stability AI announced version 2 of its groundbreaking AI imaging tool Stable Diffusion - still under an OpenSource licence. Incidentally, the tool also has local roots: a team including Robin Rombach (Stability AI) and Patrick Esser (Runway ML) from the CompVis group at LMU Munich, led by Prof. Dr. Björn Ommer, led the original Stable Diffusion V1 release.

Stable Diffusion 2.0 now offers a number of important improvements and features compared to the original V1 release:

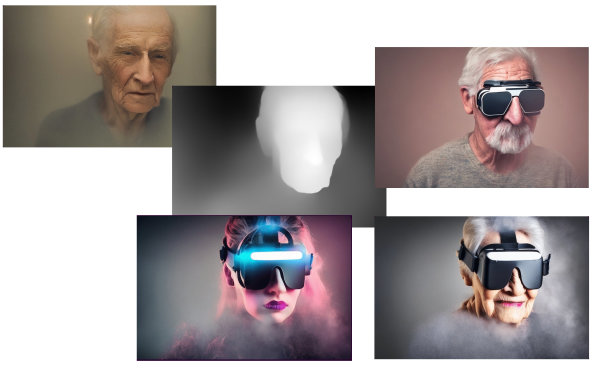

Not a photo, but a digital fantasy of Stable Diffusion 2.

The new version includes more robust text-to-image models trained with a brand new text encoder (OpenCLIP), which is said to significantly improve the quality of generated images compared to previous V1 versions. The text-to-image models in this version can generate images with standard resolutions of 512x512 pixels and 768x768 pixels. Stable Diffusion 2.0 includes an upscaler diffusion model that increases the resolution of images by a factor of 4. In combination with the text-to-image models, Stable Diffusion 2.0 can thus generate images with a resolution of 2048x2048 and higher.

Thanks to the integrated upscaler, you also get results in high resolutions.

The depth generator Depth2img sounds really exciting. This estimates the depth (i.e. the Z-buffer of an input image and then generates new images that use both the text and the depth information. Depth-to-image should allow all sorts of new creative applications to be realised, but with the coherence and depth of the image preserved.

Depth2img can estimate depth information and transfer it to other images

By the way, adult content will still be suppressed with the NSFW filter before output.

In the next few days, the new models should be integrated into the Stability AI API Platform (platform.stability.ai) and DreamStudio. We are excited to see what will come with the new tools...