[11:20 Sun, 4 May 2025 by Rudi Schmidts]

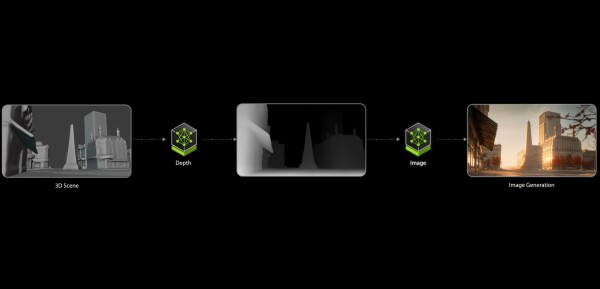

AI-powered image generation is evolving rapidly, but creative control remains one of its biggest challenges. For the time being, the state of the art is still describing scenes in text (so-called prompting). A second difficulty often lies in building a workflow that brings together the model's input and output. This is commonly done with a node structure and an interface in which the individual functions can be linked visually. ComfyUI is one of the most widely used programs from the open source community, but its not entirely trivial setup deters many users.

Nvidia wants to step into this breach with its NIM microservices and blueprints. NIMs are AI models curated and optimized by Nvidia that are easy to install and link together. Such a NIM microservice can be, for example, a large language model, but also an AI voice output. Most NIM services are based on open source models. A current overview can be found, among other places, at build.nvidia.com/models.

At the same time, Nvidia also develops and distributes so-called AI Blueprints. These are example workflows that link NIMs into a usable application. One such blueprint, for 3D-guided generative AI, now steers image generation with a 3D scene laid out in Blender: the scene supplies the image generator (FLUX.1-dev from Black Forest Labs) with a depth map, and the generator combines this with the user's prompt to produce the desired images.

In practice, the depth map helps the image model understand where things should be placed. The advantage of this technique is that no highly detailed objects or high-quality textures are required, as the scene is converted to grayscale anyway. Because the scenes are in 3D, users can easily move objects and change camera angles. Of course, a basic understanding of Blender is necessary for this.

For those who are technically interested: behind the blueprint is ComfyUI, which is particularly easy to install this way.
The FLUX.1-dev model, which has been optimized for GeForce RTX graphics processors, runs as a NIM microservice and is responsible for the image output. The AI blueprint for 3D-guided generative AI requires at least an NVIDIA GeForce RTX 4080 graphics card.
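
To make the depth-map idea more concrete, here is a minimal sketch of how per-pixel camera distances could be turned into the kind of grayscale image the article describes. This is not the blueprint's actual code, which does this step inside Blender and ComfyUI. The function name `depth_to_grayscale` is hypothetical, and the "near is bright, far is dark" convention is an assumption, although it is common for depth-conditioned image models.

```python
def depth_to_grayscale(depth, near=None, far=None):
    """Map camera distances to 8-bit gray values.

    Assumed convention (not taken from the blueprint):
    the nearest point becomes 255 (white), the farthest 0 (black).
    """
    flat = [d for row in depth for d in row]
    near = min(flat) if near is None else near
    far = max(flat) if far is None else far
    span = far - near
    if span <= 0:
        # Degenerate case: every pixel is at the same distance.
        return [[255 for _ in row] for row in depth]

    gray = []
    for row in depth:
        gray_row = []
        for d in row:
            d = min(max(d, near), far)  # clamp into [near, far]
            gray_row.append(round(255 * (far - d) / span))
        gray.append(gray_row)
    return gray


# Toy 3x3 "scene": a far background (10 units), one close object
# in the middle (2 units), and a ground strip at 4 units.
scene = [
    [10.0, 10.0, 10.0],
    [10.0, 2.0, 10.0],
    [4.0, 4.0, 4.0],
]
gray = depth_to_grayscale(scene)
for row in gray:
    print(row)
```

Only distances survive this conversion. Color, texture, and fine surface detail are discarded, which is why rough placeholder geometry in Blender is enough to guide the composition.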