[10:24 Mon, 2 October 2023 by Rudi Schmidts]

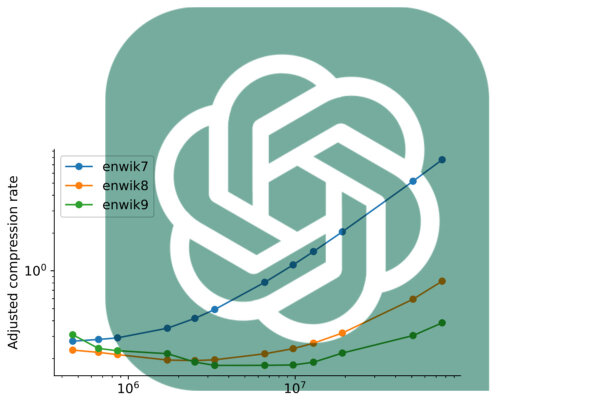

What actually happens when you compress an image losslessly? To compress data, you have to find recurring patterns in it and then represent them more compactly to save memory. For example, instead of 10110 10110 10110, you write 3 x 10110. This often saves quite a bit of storage space. Recognizing such patterns amounts to predicting how a sequence of data will continue, and predicting the next element of a sequence is exactly what large language models are trained to do. This is what connects the two worlds. But can large language models and effective lossless image compression really have much to do with each other in practice?

An arXiv research paper reports surprisingly good compression results for the language model Chinchilla 70B. The really strange thing about these results is that Chinchilla 70B was trained primarily to handle text, and yet it turns out to be surprisingly effective at compressing other types of data. In the two cases considered, images and audio, it even did better than algorithms designed specifically for these tasks, such as PNG for images. This suggests that AI models could play a bigger role in image and audio compression in the future.

The report is making big waves, especially in IT and AI circles, but there are, of course, a few caveats. First, the paper has not yet been peer-reviewed, so an error could have crept in. It is also conceivable that Chinchilla 70B somehow had access to the ImageNet image database and the LibriSpeech audio dataset during its training and therefore already knew the test data.

In addition, one should not lose sight of the size of the "decoder". To decompress a PNG file, a very small program with a few KB of code is usually sufficient, whereas Chinchilla 70B, used as a decoder, needs several high-performance GPUs running in parallel and hundreds of GB of GPU RAM. Such AI compressors are therefore by no means efficient in terms of memory consumption or computing power, and they probably won't be in the foreseeable future.
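The "3 x 10110" example from the beginning is essentially run-length encoding applied to chunks of data. The following is a minimal Python sketch of that idea, assuming the input has already been split into 5-bit chunks; the function names `encode_repeats` and `decode_repeats` are hypothetical and not taken from any real compressor, and real formats such as PNG use considerably more elaborate schemes.

```python
from itertools import groupby

def encode_repeats(chunks):
    """Collapse runs of consecutive identical chunks into (count, chunk) pairs."""
    # groupby yields one (key, iterator) pair per run of equal neighbours
    return [(sum(1 for _ in run), chunk) for chunk, run in groupby(chunks)]

def decode_repeats(encoded):
    """Expand (count, chunk) pairs back into the original list of chunks."""
    return [chunk for count, chunk in encoded for _ in range(count)]

data = "10110 10110 10110".split()   # three identical 5-bit chunks
packed = encode_repeats(data)
print(packed)                        # [(3, '10110')]
assert decode_repeats(packed) == data  # lossless: the original comes back exactly
```

The round trip is lossless because the count and the chunk together carry all the information of the run. The scheme only pays off when the data actually repeats; for data without runs, the counts add overhead instead of saving space.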
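The link between prediction and compression can be made concrete: if a model assigns probability p to the symbol that actually comes next, an arithmetic coder driven by that model spends roughly -log2 p bits on it, so better predictions mean fewer bits. The sketch below illustrates this general principle only. It uses a tiny adaptive context model (counting which bit followed the previous few bits) as a stand-in for a language model, computes the ideal code length instead of running a real arithmetic coder, and is not the method from the paper; all names are hypothetical.

```python
import math
from collections import defaultdict

def code_length_bits(symbols, alphabet, order):
    """Ideal code length in bits, the sum of -log2 p(symbol | context), that an
    arithmetic coder driven by this adaptive order-k context model would approach."""
    # Every context starts with a count of 1 per symbol (add-one smoothing),
    # so no symbol ever gets probability zero.
    counts = defaultdict(lambda: {s: 1 for s in alphabet})
    total = 0.0
    for t, sym in enumerate(symbols):
        # Context = up to `order` preceding symbols (shorter at the start).
        context = tuple(symbols[max(0, t - order):t])
        table = counts[context]
        p = table[sym] / sum(table.values())
        total += -math.log2(p)  # a confident, correct prediction costs few bits
        table[sym] += 1         # learn from the symbol; a decoder can do the same
    return total

def uniform_bits(symbols, alphabet):
    """Cost when nothing can be predicted: log2(|alphabet|) bits per symbol."""
    return len(symbols) * math.log2(len(alphabet))

data = "10110" * 20  # 100 highly repetitive bits
print(uniform_bits(data, "01"))                        # 100.0
print(f"{code_length_bits(data, '01', order=5):.1f}")  # 26.6
```

Because the decoder can rebuild exactly the same model step by step from the symbols it has already decoded, the model itself never has to be transmitted. The flip side, as the article notes, is that the decoder needs the whole model, which for a large language model is enormous.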
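A back-of-the-envelope estimate shows why the decoder is so large. It assumes the weights are stored at 16-bit (2-byte) precision, a common inference format that the article does not specify, and it ignores activations and other runtime overhead:

$$
70 \times 10^{9}\ \text{parameters} \times 2\ \tfrac{\text{bytes}}{\text{parameter}} = 1.4 \times 10^{11}\ \text{bytes} \approx 140\ \text{GB}
$$

At 32-bit precision the figure doubles to about 280 GB. Either way, the weights alone are many orders of magnitude larger than a PNG decoder of a few KB.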