[09:12 Wed, 14 February 2024 by Rudi Schmidts]

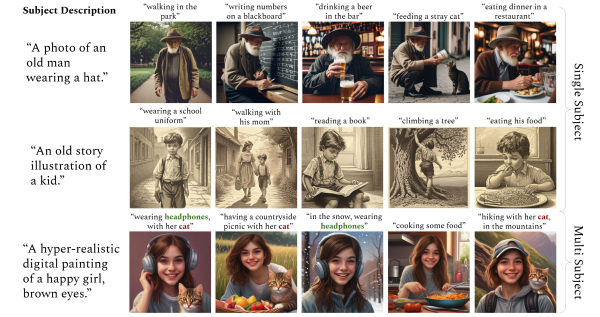

Although the project website still lists "anonymous authors", the linked paper (arxiv.org/pdf/2402.03286.pdf) makes it clear that "ConsiStory" comes out of Nvidia's research labs. The project tackles a familiar problem: it is often difficult to keep one or more characters consistent across several image generations. An "old man with a hat", for example, usually looks noticeably different with each generation attempt, depending on the other tokens in the prompt. This is commonly referred to as the consistency problem of generative AI.

With ConsiStory, by contrast, it should now be possible to generate consistent subjects across a series of images in Stable Diffusion XL (SDXL) without any additional training. The Nvidia researchers achieve this with a new technique they call "correspondence-based feature injection". According to the paper, ConsiStory can also be extended to multi-subject scenarios and enables training-free personalization for common objects.

ConsiStory allows the use of consistent characters without fine-tuning

Because no training is required, such images can be generated in about ten seconds on a single Nvidia H100, which, according to the paper, is roughly twenty times faster than previous state-of-the-art methods. Judging by the quality of the results published so far, Nvidia may well have reached a small milestone in generative AI research here, since character consistency is one of the major problems still standing in the way of many practical applications of generative AI. That, of course, also includes some rather unwelcome projects, such as fully automated virtual AI influencers. Code for trying out ConsiStory yourself is to be released "shortly".