[17:22 Sun, 10 April 2022 by Thomas Richter]

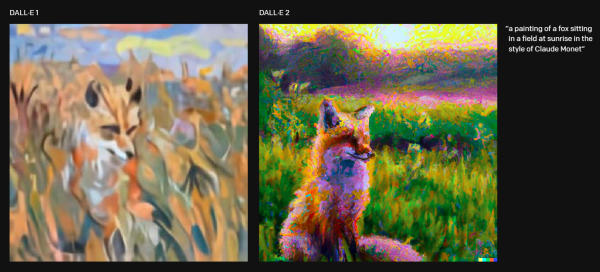

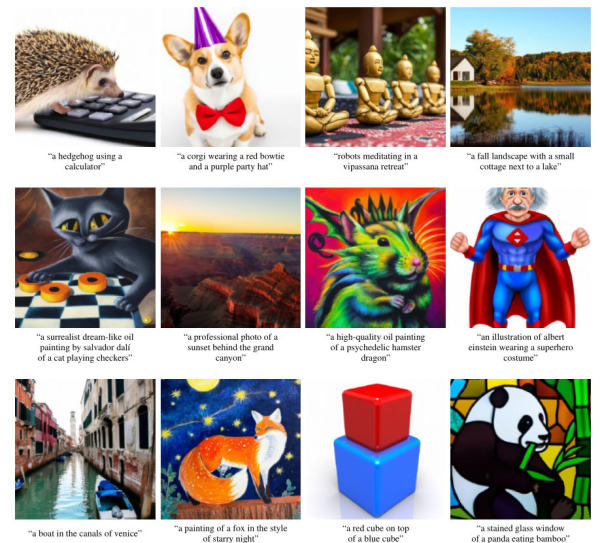

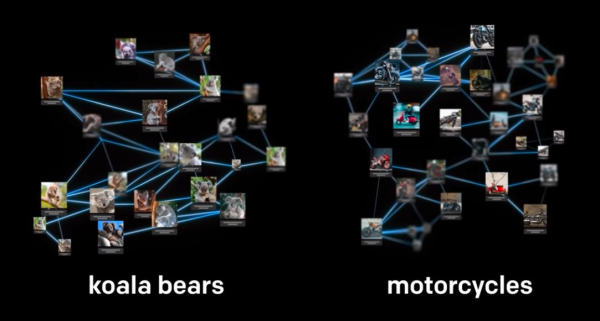

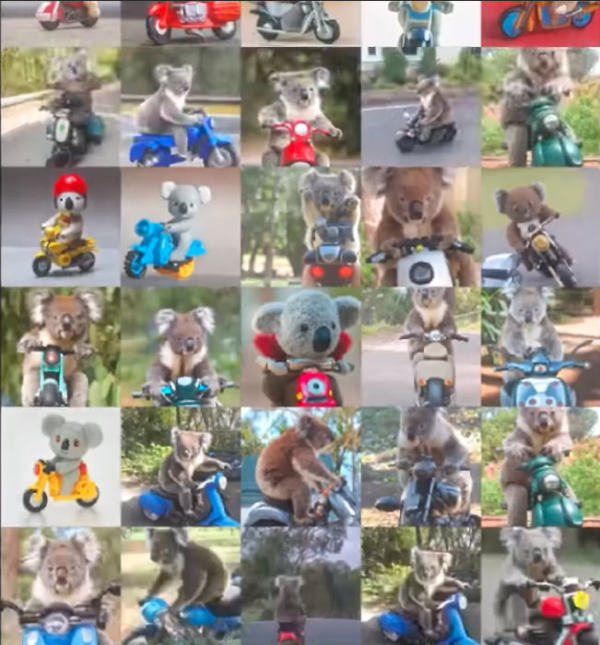

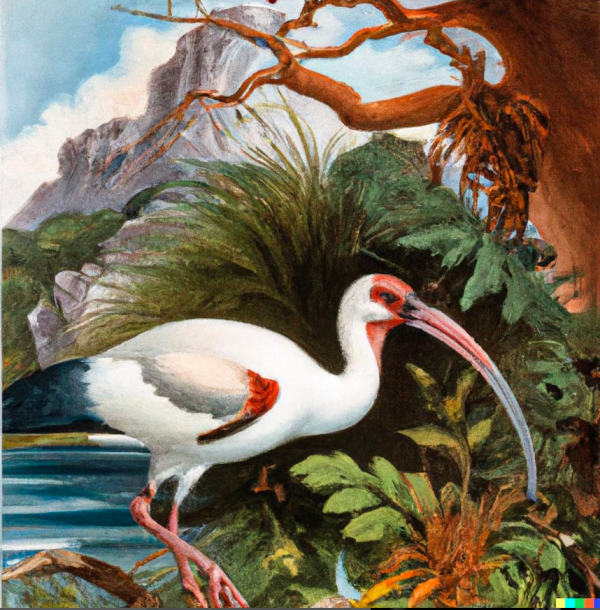

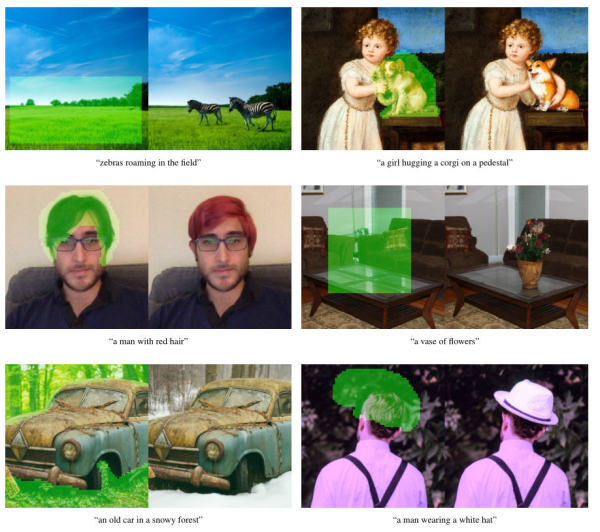

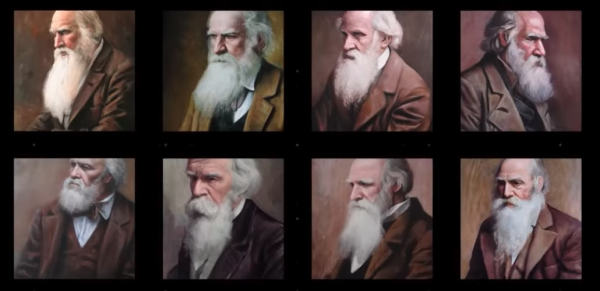

A little over a year ago, OpenAI launched the first version of DALL-E (www.slashcam.de/news/single/KI-erfindet-Bilder-nach-Textbeschreibung---DALL-E-16275.html), a neural network that can generate images from simple text descriptions alone. Now the latest version has been introduced, with numerous improvements.

DALL-E 1 and 2 in comparison

For example, DALL-E 2 not only generates better images, and generates them faster, but also does so at four times the resolution (1,024 x 1,024) of its predecessor. And for the first time, images can not only be generated but also selectively edited via text: individual image elements can now be both added and removed.

Some examples

DALL-E 2 (a portmanteau of Salvador Dalí and WALL-E) was trained on images together with their descriptions. In the process, the neural network learned the relationship between images and the text describing them. For those interested in the technical details: How does DALL-E 2 work?

Examples

The input is a description of what should appear in the generated image. It can name not only the objects to be shown, but also their relations to other objects or even actions, for example "a hedgehog operating a calculator" or "rabbit detective sitting on a park bench reading a newspaper in a Victorian setting".

Pictures of koalas and motorcycles

Many example pictures of koalas riding motorcycles

The style of the picture can also be specified, because DALL-E 2 can produce photorealistic pictures as well as cartoons, abstract painting styles (such as psychedelic, wax crayon or oil painting) or the styles of famous painters (such as Dalí, Monet, Picasso and others).

An elephant tea party on a grass lawn

More beautiful examples created by DALL-E 2 from user prompts can be found at https://twitter.com/sama/status/1511724264629678084 (Twitter).

Illustrator jobs at risk?

The results are amazing; after all, there are no limits to the imagination.
Potentially any image that one can think up and describe can be created, no matter how fantastic or absurd the subject. There are already fears that, should the algorithm become generally accessible and the image quality improve a little further, illustrators could lose their jobs, since DALL-E 2 can produce even highly specific subjects from a free-form description in seconds, along with an immense number of alternatives to choose from.

An ibis in the wild, painted in the style of John Audubon

Even more examples can be found at

Change images via DALL-E 2.

The new option of subsequently editing images ("inpainting") with DALL-E 2 works quite simply: first, the image area where the change is to be made is roughly marked, and then the desired change is described in text. For example, a hat can be put on a person, an object can be added or removed, or the background can be changed.

The special thing about this is that shadows, reflections and textures of the objects and the background are automatically taken into account during these operations, and the style of the inserted object (whether photorealistic or stylized) is adapted to that of the image.

A corgi in a museum - inserted in different places

DALL-E 2 can also generate variations of an image.

Variations

Dangers.

The OpenAI team has restricted direct access to DALL-E 2's API, i.e. the ability for (potentially) anyone to freely generate images with it, and has released it only to a small group of selected users. The possibilities for misuse, such as pornographic or violent images, are considered too dangerous.

A photo of an astronaut riding a horse

For this reason, the researchers have made an effort to filter sexual and violent content out of the training data for DALL-E 2. In addition, filters for text input and uploaded images are intended to ward off abuse of the system.
Similarly, the training process has been modified to limit the ability of the DALL-E 2 model to memorize faces from the training data, to prevent the model from faithfully reproducing images of celebrities and other public figures.

Problems

However, if you take a closer look at the generated images, you will sometimes notice image errors that are not so easy to spot from a distance: some parts of an object are often strangely distorted and indistinct.

A rabbit detective sitting on a park bench and reading a newspaper in a Victorian setting

Detail from rabbit detective image with distortions

Similarly, DALL-E 2 cannot depict objects it does not know, i.e. objects on which it has not been trained.

Goals.

The team at OpenAI hopes that DALL-E 2 will also help in understanding how advanced AI systems see and understand our world. This is important because deep learning systems are a kind of black box: they are self-learning, and it is often impossible to tell what exactly has been learned. The images DALL-E 2 generates, including the failed ones, offer a way to gain deeper insights into the inner workings of the neural network. The new version of DALL-E confirms what we already concluded for the first generation.
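
The editing workflow described above under "Change images via DALL-E 2" (roughly mark an area, then describe the change) can be illustrated with a toy example. This is only a minimal sketch of the idea that a change is confined to a marked region; it is not how DALL-E 2 is implemented, and it does none of the shadow, reflection or style matching the article mentions. The names `rectangle_mask` and `composite`, and the tiny grayscale "images", are hypothetical and exist only for illustration.

```python
from typing import List

# Toy types: a grayscale image is a grid of ints, a mask is a grid of booleans.
Image = List[List[int]]
Mask = List[List[bool]]


def rectangle_mask(height: int, width: int,
                   top: int, left: int, bottom: int, right: int) -> Mask:
    """Roughly mark a rectangular area; bottom and right are exclusive."""
    return [
        [top <= y < bottom and left <= x < right for x in range(width)]
        for y in range(height)
    ]


def composite(original: Image, generated: Image, mask: Mask) -> Image:
    """Return a new image: `generated` inside the mask, `original` elsewhere."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated and mask must have the same height")
    result: Image = []
    for row_orig, row_gen, row_mask in zip(original, generated, mask):
        if not (len(row_orig) == len(row_gen) == len(row_mask)):
            raise ValueError("original, generated and mask must have the same width")
        # Take the generated pixel only where the mask marks the edit area.
        result.append([g if m else o
                       for o, g, m in zip(row_orig, row_gen, row_mask)])
    return result
```

In this sketch, `generated` stands in for whatever content a model would produce from the text description; everything outside the marked rectangle is left untouched, and the original image is not modified.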

German version of this page: DALL-E 2: KI generiert und editiert Bilder nur anhand von Textbeschreibung