[10:14 Sat, 10 February 2018 by Thomas Richter]

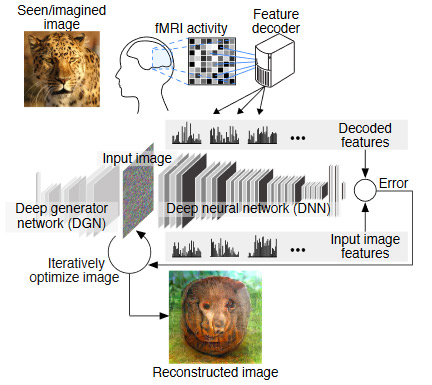

Scientists from the ATR Computational Neuroscience Laboratories and Kyoto University have developed a deep-learning-based algorithm that measures a person's brain activity and produces rough, shadowy images of what that person is currently seeing.

Figure: Example pictures

The special feature: the trained deep neural network (DNN) can not only partially reconstruct images that a person is looking at in that moment, but also shapes that the person merely imagines. Because of its layered design, the DNN is structurally similar to the hierarchical processing of the (in this case visual) sensory stimulus in the human brain, and this similarity is used to adjust the generated image until it matches the one presented. An Nvidia GTX 1080 Ti and a Tesla K80, a GPU specialized for machine learning, were used to compute the DNN. The neural network was trained on 1000 photos, which were shown to 3 participants in an MRI scanner over a period of 10 months.

Figure: Functionality

The results, still very blurred, could be improved in the future through higher-resolution imaging techniques, more training and changes to the algorithm. If the image quality were to improve further, this would be a technology that seems to come straight out of a science fiction film: images could be drawn, models designed or visual memories shown to other people by imagination alone. Of course, this would also require a somewhat more compact form of the MRI scanner, or another technology for measuring brain activity precisely.
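The idea of adjusting an image until its DNN features match a target can be illustrated with a toy sketch. This is not the researchers' code: the small random `tanh` network, the function names (`make_toy_network`, `forward`, `reconstruct`) and all sizes are assumptions made for illustration, and the target features are computed here from a hidden test image, standing in for the features that would be decoded from brain activity in the actual study.

```python
import numpy as np


def make_toy_network(n_pixels, layer_sizes, seed=0):
    """Build random weight matrices for a small layered network (hypothetical stand-in for a DNN)."""
    rng = np.random.default_rng(seed)
    weights = []
    n_in = n_pixels
    for n_out in layer_sizes:
        weights.append(rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in)))
        n_in = n_out
    return weights


def forward(weights, x):
    """Return the activations of every layer for the flattened image x."""
    activations = []
    h = x
    for W in weights:
        h = np.tanh(W @ h)
        activations.append(h)
    return activations


def loss_and_grad(weights, x, targets):
    """Squared feature error summed over all layers, and its gradient w.r.t. the pixels."""
    acts = forward(weights, x)
    loss = sum(0.5 * np.sum((a - t) ** 2) for a, t in zip(acts, targets))
    grad = np.zeros_like(acts[-1])
    for i in reversed(range(len(weights))):
        grad = grad + (acts[i] - targets[i])   # error contributed directly by layer i
        grad = grad * (1.0 - acts[i] ** 2)     # back through tanh
        grad = weights[i].T @ grad             # back through the linear map
    return loss, grad


def reconstruct(weights, targets, n_pixels, steps=300, lr=0.05):
    """Start from a blank image and nudge the pixels toward the target features."""
    x = np.zeros(n_pixels)
    losses = []
    for _ in range(steps):
        loss, grad = loss_and_grad(weights, x, targets)
        losses.append(loss)
        x -= lr * grad
    return x, losses


if __name__ == "__main__":
    weights = make_toy_network(n_pixels=64, layer_sizes=[48, 32])
    hidden_image = np.random.default_rng(1).uniform(-1.0, 1.0, 64)
    targets = forward(weights, hidden_image)  # stand-in for decoded brain features
    image, losses = reconstruct(weights, targets, n_pixels=64)
    print("feature error at start:", losses[0])
    print("feature error at end:  ", losses[-1])
```

Matching features on several layers at once mirrors the hierarchical comparison the article describes: lower layers constrain fine detail, higher layers constrain more abstract structure.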