With OpenAI having just dominated the AI news with DALL-E 3, Meta is making its next move in the field of generative diffusion models. Although "Emu" has not yet been officially announced, a paper has already been submitted, and it is attracting considerable interest in the community.

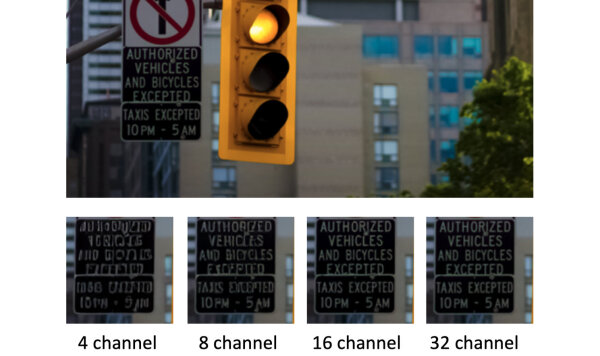

The paper introduces two particularly interesting novelties with Emu. First, Meta found that increasing the number of channels in the autoencoder from 4 to 16 significantly improved the reconstruction of fine details. Small text, for example, remains clearly legible.

Meta's Emu uses 16 channels in the autoencoder and thus preserves more detail.

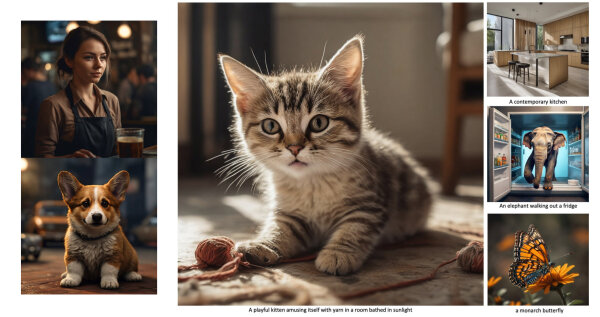
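To get a feel for what the jump from 4 to 16 latent channels means, here is a rough back-of-the-envelope sketch. It assumes a 512x512 RGB input and an 8x spatial downsampling factor, which is common for latent diffusion autoencoders but is not stated in this article; the function names are purely illustrative.

```python
def latent_shape(height, width, channels, downsample=8):
    """Shape (C, H, W) of the latent for a given image size.

    downsample=8 is an assumption, not a value taken from the article.
    """
    return (channels, height // downsample, width // downsample)


def compression_ratio(height, width, channels, downsample=8, image_channels=3):
    """How many input values are squeezed into one latent value."""
    c, h, w = latent_shape(height, width, channels, downsample)
    return (image_channels * height * width) / (c * h * w)


# Compare the two channel counts mentioned in the article.
for channels in (4, 16):
    shape = latent_shape(512, 512, channels)
    ratio = compression_ratio(512, 512, channels)
    print(f"{channels:>2} channels: latent shape {shape}, compression {ratio:.0f}x")
```

Under these assumptions, the 16-channel latent carries four times as many values per image as the 4-channel one, which gives the decoder considerably more room to reconstruct fine structures such as small text.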

According to Meta, however, the most important insight from Emu is that supervised fine-tuning on a surprisingly small set of extremely visually appealing images can significantly improve generation quality.

Emu itself is a fairly standard latent diffusion model that was pre-trained on 1.1 billion image-text pairs. The subsequent fine-tuning, however, used only "a few thousand" images, carefully selected for their "aesthetic excellence." With this quality-over-quantity strategy, the fine-tuned model was preferred over its own version without fine-tuning 82.9 percent of the time, according to a user preference evaluation. Even compared to Stable Diffusion XL, users preferred Emu's results in more than 2 out of 3 cases in a web test.
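Figures like "82.9 percent" are win rates from pairwise comparisons: raters see two images for the same prompt and pick the one they prefer. The following minimal sketch shows how such a number can be computed. The vote list is made up for illustration, and excluding ties from the denominator is an assumption here, not necessarily how Meta counted.

```python
from collections import Counter


def win_rate(votes, candidate="A"):
    """Share of decided comparisons won by `candidate`.

    votes: iterable of "A", "B" or "tie", one entry per comparison.
    Ties are ignored (an assumption made for this sketch).
    """
    counts = Counter(votes)
    decided = counts["A"] + counts["B"]
    if decided == 0:
        raise ValueError("no decided votes")
    return counts[candidate] / decided


# Made-up example votes: "A" = fine-tuned model, "B" = model without fine-tuning.
votes = ["A", "A", "B", "A", "tie", "B", "A"]
print(f"Win rate of A: {win_rate(votes):.1%}")
```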

Meta's Emu - Image Generator with Aesthetically Curated Fine-Tuning

It remains to be seen what Meta will ultimately do with its model. Meta's last major large language model (LLM), Llama, quickly found its way into the hands of the open source community, which naturally raises similar expectations for Emu. However, we will have to wait a few more days until Meta announces its concrete plans.