[11:03 Sat, 11 March 2023 by Rudi Schmidts]

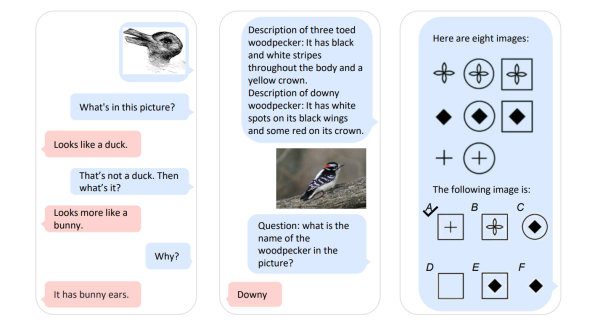

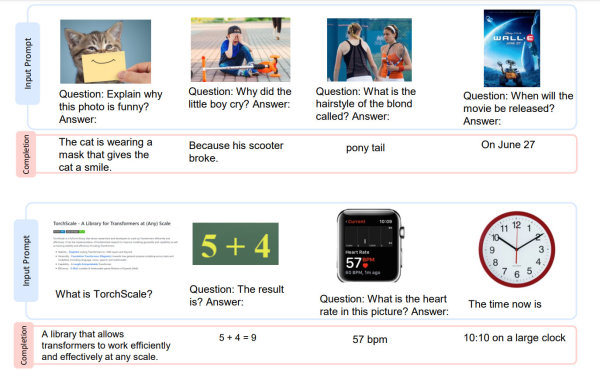

Heise was the first to report on Thursday that the imminent arrival of GPT-4 was mentioned almost in passing at the Microsoft event "AI in Focus - Digital Kickoff". Andreas Braun, CTO Microsoft Germany and Lead Data & AI STU, was even more specific: "We will present GPT-4 next week, there we have multimodal models that will offer completely different possibilities - for example videos".

Unlike "large language models" (LLMs), multimodal models are not limited to language for input and output. You can use text as input, but you do not have to: you can also "input" an image, a sound or, according to Microsoft's hint, even a video.

Only a few days ago, Microsoft presented its own first large multimodal model, Kosmos-1. Kosmos-1 is NOT GPT-4; the only thing the two have in common is that GPT-4 can also work multimodally. So something similar could soon be possible with video input.

It is also to be expected that multimodal output will become usable in the future. Whether this is already the case with GPT-4 will be clarified next week. In any case, we should soon see the convergence of GPT and diffusion models.

Incidentally, the managing director of Microsoft Germany, Marianne Janik, emphasised at the same event that AI is not about replacing jobs, but about doing repetitive tasks in a different way than before. Many people will continue to be needed as experts to make the use of AI value-adding.

So you'd better start practising your prompting, dear people...