[12:59 Thu, 15 September 2022 by Thomas Richter]

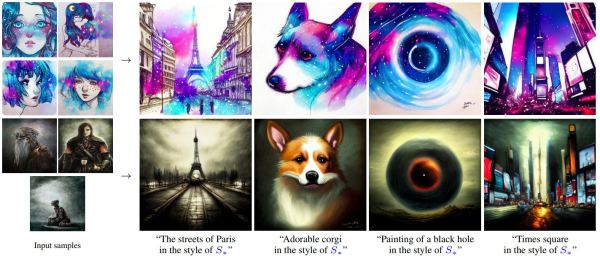

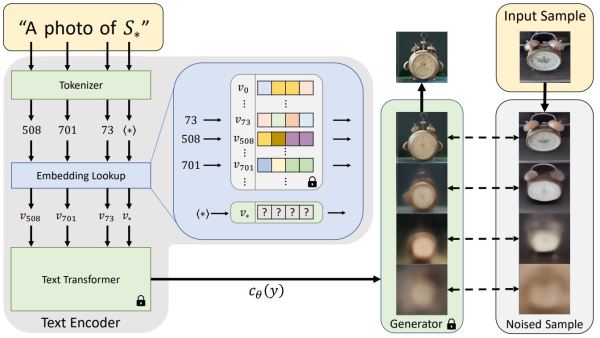

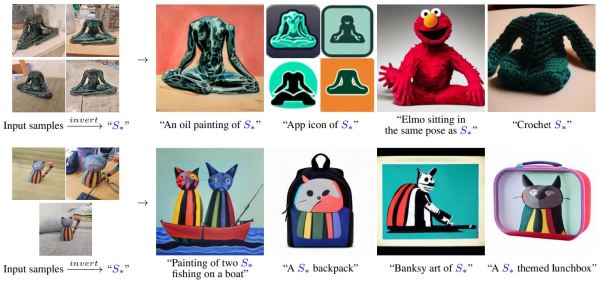

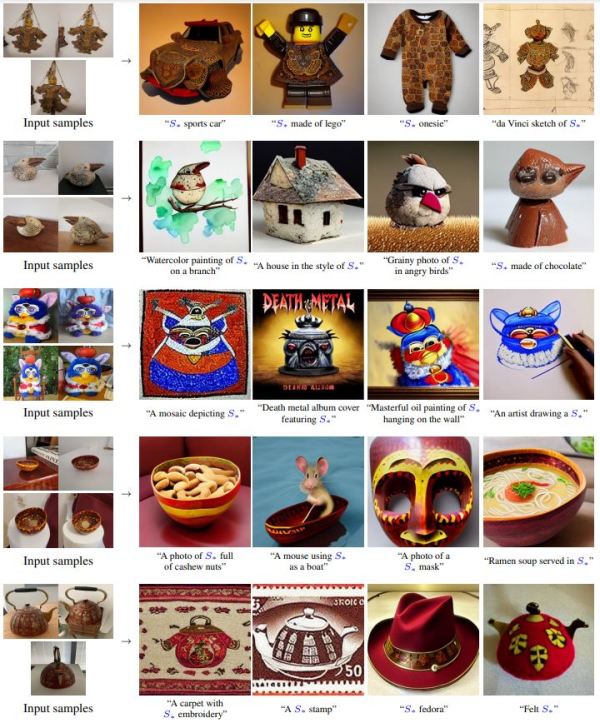

The development of text-based AI image generation continues at a rapid pace and keeps yielding new practical functions. A team of researchers from Nvidia and Tel Aviv University has now presented an algorithm that adds a useful capability: integrating one's own objects into image syntheses, so that very specific objects (such as one's own cat or car) can appear in the generated images.

To do this, the algorithm, called "Textual Inversion" (or "Personalized Text-to-Image Generation"), is trained on several images of the desired object (ideally 3-5) showing it from different views. What it learns is not a retrained image model but a new word: the algorithm finds an embedding for this word in the vocabulary of the existing, otherwise unchanged image AI. The new word can then be used in text prompts, much like a variable.

This makes it easy, for example, to manipulate the depicted object via text: to change its color, place it in a different environment or painting style, or even turn it into a marble statue. It gives a foretaste of future object-based AI image editing (and soon video editing) via text. More abstract concepts, such as a new painting style or look, can also be trained and then used to give images a specific look. In the following example, Textual Inversion is trained on a headless statue in a cross-legged pose, which can then be reproduced in other styles (e.g. as an oil painting, an icon or a crochet figure).

And thanks to the very active community around the recently released open source …
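The principle that the new word behaves like a trainable variable can be illustrated with a deliberately tiny toy in Python. This is a minimal sketch under heavy assumptions, not the researchers' code: the "text encoder" is a made-up embedding table, the "image model" is a fixed random rotation standing in for a real diffusion model, and the "images" are synthetic feature vectors. The names `vocab`, `encode`, `generate`, `invert` and the placeholder token `S*` are hypothetical. The real method optimizes the diffusion model's denoising loss instead of the simple squared error used here. What the toy does share with Textual Inversion is the core idea: the model and all existing word embeddings stay frozen, and only the embedding of one new token is optimized so that prompts containing it reproduce the example images.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 8

# Frozen toy vocabulary: one fixed embedding vector per known word
# (hypothetical stand-in for a text encoder's embedding table).
vocab = {w: rng.normal(size=DIM) for w in ["a", "photo", "painting", "of"]}

# Frozen toy "image model": a fixed random orthogonal matrix (from a QR
# decomposition) mapping a pooled prompt embedding to image features.
# Its weights are never updated.
W, _ = np.linalg.qr(rng.normal(size=(DIM, DIM)))


def encode(prompt, extra=None):
    """Mean-pool token embeddings; `extra` supplies embeddings for new tokens."""
    table = {**vocab, **(extra or {})}
    return np.mean([table[tok] for tok in prompt.split()], axis=0)


def generate(prompt, extra=None):
    """Toy 'generation': map the pooled prompt embedding to image features."""
    return W @ encode(prompt, extra)


# Hypothetical training set: features of 4 example images of "my cat",
# simulated as noisy copies of one hidden vector.
hidden = rng.normal(size=DIM)
images = [hidden + 0.05 * rng.normal(size=DIM) for _ in range(4)]


def invert(images, template="a photo of S*", steps=500, lr=1.0):
    """Learn an embedding for the placeholder token 'S*' by gradient descent.

    Only `v` is trained; `vocab` and `W` stay frozen.
    """
    v = np.zeros(DIM)  # the single trainable parameter: the embedding of "S*"
    n_tokens = len(template.split())
    target = np.mean(images, axis=0)
    for _ in range(steps):
        pred = generate(template, {"S*": v})
        # Loss: mean over images of ||pred - img||^2.
        # pred = W @ (sum of token embeddings) / n_tokens, so
        # d loss / d v = 2 / n_tokens * W.T @ (pred - mean(images)).
        grad = 2.0 / n_tokens * W.T @ (pred - target)
        v -= lr * grad
    return v


# Usage: learn "S*" once, then reuse it in new prompts like a variable.
s_star = invert(images)
print(generate("a painting of S*", {"S*": s_star}))
```

The learned vector is stored separately from the frozen model and simply plugged into any prompt that mentions `S*`, which is why the article compares it to a variable. The orthogonal matrix is chosen only to keep the toy optimization well-conditioned, so plain gradient descent converges quickly.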