[11:34 Fri, 24 March 2023 by Thomas Richter]

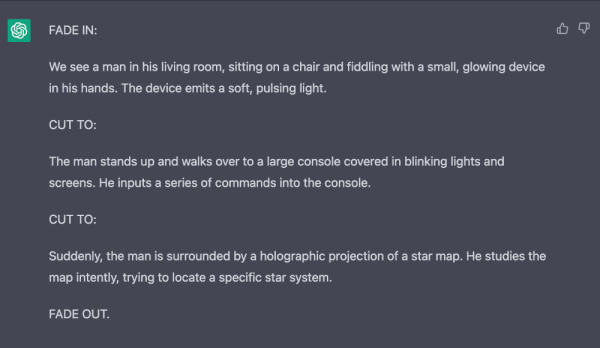

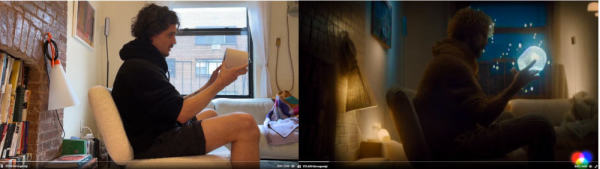

The following short experimental video by designer Nick St. Pierre nicely demonstrates how AI tools can be used in video production. He used several different AI tools to produce the clip, namely ChatGPT, the image AI Midjourney, and a third tool that is so far accessible only in closed beta. Helpfully, he explains in a Twitter thread, which we reproduce here, how he uses the different AIs in combination.

In the beginning, the "script" was developed via ChatGPT. The prompt was: "Write a script for a 9-second video consisting of three 3-second clips. The story should feature a man in his living room and have a science fiction theme."

ChatGPT's script

Then a reference image was generated using the image AI Midjourney.

The reference image from Midjourney

In the next step, St. Pierre very roughly filmed the scenes described in the script with his iPhone. To facilitate the style transfer, he tried to recreate the pose from the Midjourney image. His own video acts as a reference for generating the AI video, similar to how image generation with Stable Diffusion can be guided by a sample image.

Before and after

This self-shot clip, along with the image from Midjourney, then served as the basis for transferring the image style to the entire video.

The reference video he shot himself

The next step was simply editing the three individual clips together in iMovie. Then all that was missing was the soundtrack, which St. Pierre created with the help of a sound AI.

Of course, the small clip is just a proof of concept to show how different AI tools could be used in a real film production, provided the quality of the AI-generated results is good enough. In a real clip production, more time would naturally be invested in fine-tuning the results to achieve a consistent look. Depending on the AI tool used, it will still take more or less time before production-ready results are possible right off the bat.
But how fast the development of AI tools is progressing at the moment can be seen nicely in this comparison of the output of the different versions of the image AI Midjourney within only one year - the video AIs will probably improve similarly fast:

Midjourney Evolution

Video generated entirely by AI

Very soon it will also be possible, for example via [...]