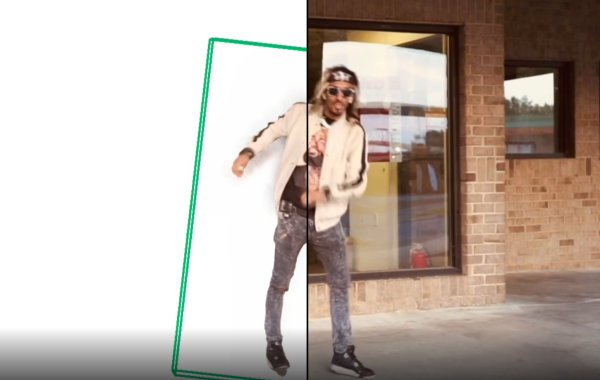

Once again, an AI paper that you could have seen coming, and yet it is still a marvel that such things are now simply possible. This time it is about estimating how dancing people in YouTube videos look from every side, in every phase of their movement. That sounds abstract at first, so here is an example. After HumanNeRF has watched a video from the net, it can...

a) separate the dancing person in the video from an almost arbitrary background and

b) let the camera move freely around the generated 3D model of the person.

So the AI learns from a more or less arbitrary dance what the person looks like from all sides, and can then render, for each frame, its own estimate of how the person would look from a different angle. This goes so far that even the way the clothes drape is reproduced correctly.
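To make "a different angle" a little more concrete, here is a minimal sketch of how a virtual camera could be placed on a circle around the subject. It is not taken from the HumanNeRF code; the function name `orbit_camera`, the coordinate convention (y is up, 0 degrees is in front of the subject) and the example numbers are all assumptions made for illustration.

```python
import math

def orbit_camera(center, radius, angle_deg):
    """Return (position, view_direction) for a camera circling `center`.

    Hypothetical convention: y points up, and 0 degrees places the
    camera on the +z side of the subject.
    """
    cx, cy, cz = center
    a = math.radians(angle_deg)
    # The camera stays at the subject's height and moves on a horizontal circle.
    position = (cx + radius * math.sin(a), cy, cz + radius * math.cos(a))
    # Unit vector pointing from the camera back to the subject.
    direction = tuple((c - p) / radius for c, p in zip(center, position))
    return position, direction

# Front view and the "invented" view from behind, 180 degrees apart.
subject = (0.0, 1.0, 0.0)  # assumed subject centre, about one metre above the floor
front_pos, front_dir = orbit_camera(subject, 3.0, 0.0)
back_pos, back_dir = orbit_camera(subject, 3.0, 180.0)
```

A renderer would then be asked to produce the current frame of the dance once per camera position, which is the part the learned model takes care of.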

In extreme cases, the AI model can even "invent" a plausible view of the person from behind, rotated by 180 degrees. In other words, a perspective in which not a single real pixel from the original clip is visible. The astonishing thing about this algorithm is that a program can build a model of the 3D structure of people and their clothes from the view of a single camera, and then render that model as a quite impressive 3D representation.

The quality of the generated change of perspective varies considerably and of course depends heavily on the training material. However, it is clearly above older AI methods and mostly at least on the level of a good 3D video game rendering. That already makes it useful today for moving people around in the background of a virtual environment or for letting them blur in the bokeh.

In contrast to dedicated 3D scans, HumanNeRF obtains its data simply by watching a movement. So no specific choreography is needed. The body only has to have been seen once from all sides. Fantastic times...