[16:59 Fri, 25 October 2024 by Thomas Richter]

Yet another promising open-source video AI has been released: Mochi 1 is the new SOTA (state-of-the-art) video model from the startup Genmo, available along with its weights under the free Apache 2.0 license. According to Genmo, Mochi 1 outperforms current top video AIs such as Runway Gen 3, Kling, or Luma's Dream Machine in terms of motion coherence and prompt interpretation. The current version is still a preview, limited to a resolution of 480p (640 x 480 pixels) with a (very high) frame rate of 30 fps and a duration of 5.4 seconds, but an HD version is expected later in the year.

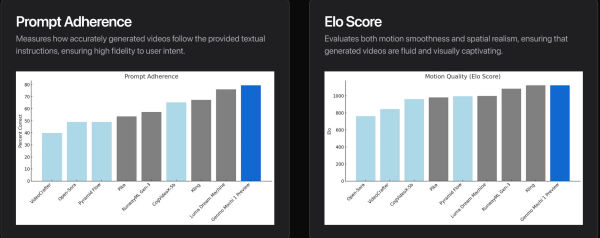

The new model, which has 10 billion parameters and is built on the new Asymmetric Diffusion Transformer (AsymmDiT) architecture, is particularly good at producing coherent and realistic depictions of motion, both for individual subjects (such as humans and animals) and for complex physical phenomena such as fluid dynamics or the movement of fur and hair. Like all good video AIs, Mochi 1 takes a step toward becoming a world simulator capable of realistically depicting anything imaginable. The 30 fps frame rate, which is very high for a video AI, also ensures smooth motion. Another strength is its precise prompt interpretation, meaning how well Mochi 1 translates a text prompt into moving images with respect to the subject, background, and desired actions.

Here’s a comparison with leading open-source and commercial (closed-source) video AIs in terms of motion coherence and prompt interpretation:

In principle, Mochi 1 requires 4 of Nvidia's specialized ...

Mochi 1 is definitely an interesting addition to the current open-source video AIs like ...