Samsung is also active in applied AI research through its Samsung AI lab, and has now published a remarkable project. Egor Zakharov and his colleagues combined existing deep learning models for face swapping with an adversarial network to make the results even more realistic. The results are striking:

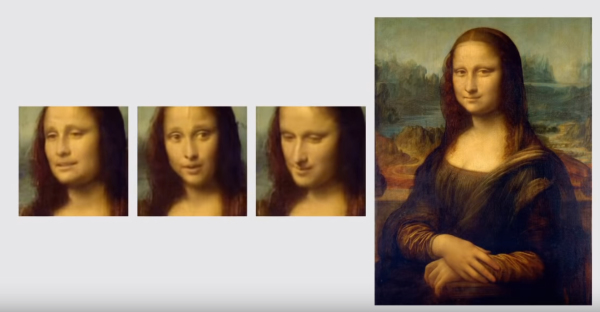

The new model can generate a convincingly animated face from a single still image. It is almost eerie to see historical figures brought back to life, for example from a black-and-white photo. Famous works of art such as the Mona Lisa can also be made to speak.

If several still images are available for training, the realism of the result improves even further, approaching perfection. The corresponding paper includes a useful comparison with current models (X2Face and Pix2pixHD).