[19:51 Mon, 20 February 2023 by Thomas Richter]

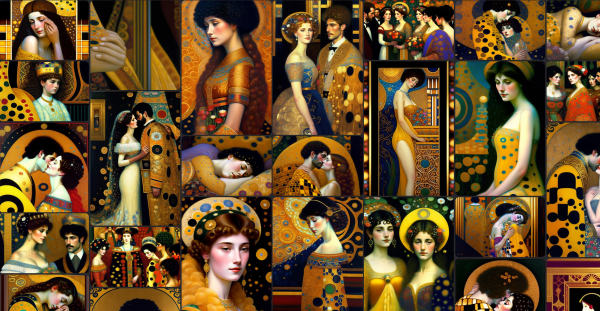

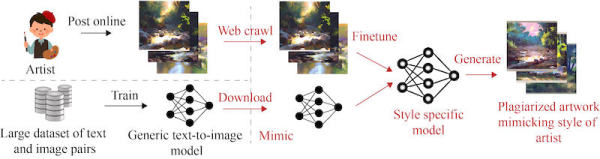

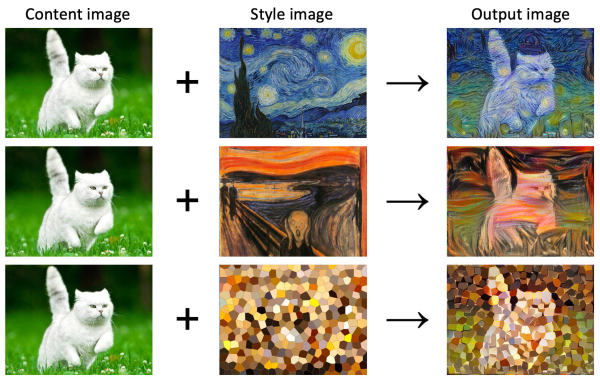

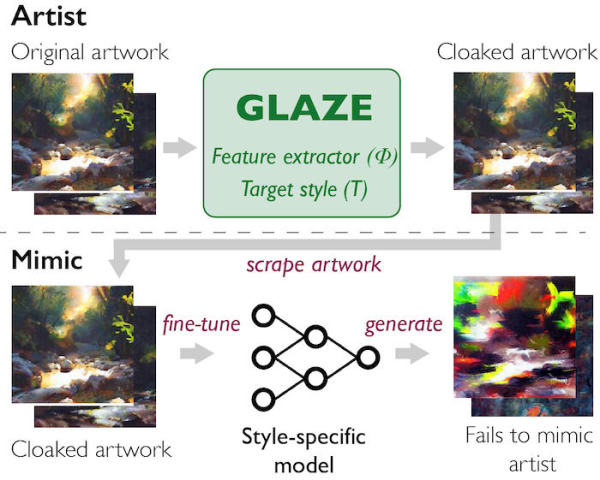

The boom in image-generating AIs that can produce any image from a prompt has caused great anxiety among artists, because the AI can often imitate any style of art or any individual artist impressively well, whether painting, photography or digital art. The AI can then churn out images in that style in virtually unlimited numbers, an uncanny and overwhelming form of competition for human artists.

Pictures generated in the style of Gustav Klimt

Why can AIs imitate artists so well?

Image AIs are built on training material: billions of images with text descriptions downloaded from the web, such as the LAION dataset.

An example of the LAION dataset

The artist community is trying to fight back by … Until now, however, artists have otherwise been powerless to stop their work from being used as training material for an image AI, which makes their highly individual style easy to imitate via a prompt. No longer posting images on the web, or posting only low-resolution versions, is not a real option for artists who depend on the public as potential buyers. Stable Diffusion has even made a concession to artists: version 2.0 removed the ability to copy a particular artist's style by naming the artist in the prompt. However, the artists' styles are still contained in the model and can often still be coaxed out indirectly with descriptive prompts.

How image AIs learn styles

That's why a team of computer scientists at the University of Chicago has developed a new way for artists to defend themselves against their images being used for training without their consent. The technique, called Glaze, alters the images posted on the web in ways that are almost imperceptible to humans, making them "indigestible" for image-AI training: an AI trained on Glaze-protected images is unable to reproduce their style.

On the left, the original artwork by Karla Ortiz; next, the same artwork with the "low" cloaking setting; and then the same artwork with the "high" cloaking setting. The changes are nearly invisible to humans.
How does Glaze work?

To cloak an artist's work effectively, you first have to identify the key characteristics that make up his or her unique style. To do this, the researchers came up with the idea of turning AI against itself, so to speak. Style transfer algorithms, close relatives of generative AI models, take an existing image such as a portrait, still life or landscape and render it in any desired style, such as cubist, watercolor, or the style of well-known artists like Rembrandt or Van Gogh, without altering its content.

Style transfer examples from GO Data Driven

Glaze uses such a style transfer algorithm to analyze a work of art and identify the specific features that change when the image is transformed into a different style. It then alters those features just enough to fool AI models that try to mimic the style, while leaving the original artwork virtually unchanged to the naked eye. Glaze thus exploits the fact that image AIs "perceive" images differently than humans do.

As the sample images below show, cloaking with Glaze worked so well that after training on the cloaked image, the image AI was no longer able to mimic the artist's style.

On the left, the original artwork by Karla Ortiz; in the middle, plagiarized artwork created by an AI model; and on the right, the image the AI generated after the original artwork had been cloaked.

The special twist: even if only some of an artist's images are cloaked with Glaze, and both protected and unprotected images are used to train an AI, the AI still cannot reproduce the artist's style, because the Glaze-protected images effectively confuse it. Since new image AI models are trained over and over again on fresh image material collected from the web, the researchers and the artist community hope that image AIs will thereby even "unlearn" artist styles they have already learned.
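The cloaking idea described above can be framed as a small optimization problem: nudge the pixels so that the image's "features", as a model sees them, move toward those of a style-transferred copy, while no pixel changes by more than a tiny amount. The following Python sketch illustrates that idea on a toy scale, using projected gradient descent. It is not Glaze's actual implementation and makes several assumptions: the random linear feature map `W` stands in for a real image encoder, images are flat lists of pixel values in [0, 1], the per-pixel limit `budget` stands in for whatever perceptual constraint Glaze really applies, and all names (`cloak`, `feature_distance`, `style_target`, etc.) are hypothetical.

```python
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def mat_t_vec(W, u):
    """Multiply the transpose of W by vector u."""
    return [sum(W[i][j] * u[i] for i in range(len(W))) for j in range(len(W[0]))]


def feature_distance(W, a, b):
    """Squared distance between the feature vectors of images a and b."""
    return sum((p - q) ** 2 for p, q in zip(matvec(W, a), matvec(W, b)))


def cloak(image, style_target, W, budget=0.05, step=0.01, iters=200):
    """Hypothetical cloaking routine (toy projected gradient descent).

    Searches for a perturbation delta with |delta_i| <= budget that moves the
    features of image + delta toward the features of style_target, so the
    pixels themselves change by at most `budget` each.
    """
    delta = [0.0] * len(image)
    target_features = matvec(W, style_target)
    for _ in range(iters):
        cloaked = [x + d for x, d in zip(image, delta)]
        residual = [p - q for p, q in zip(matvec(W, cloaked), target_features)]
        # Gradient of 0.5 * ||W(image + delta) - W(style_target)||^2 w.r.t. delta
        grad = mat_t_vec(W, residual)
        for i, x in enumerate(image):
            d = delta[i] - step * grad[i]
            d = max(-budget, min(budget, d))  # per-pixel change stays small
            d = max(-x, min(1.0 - x, d))      # cloaked pixel stays in [0, 1]
            delta[i] = d
    return [x + d for x, d in zip(image, delta)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_pixels, n_features = 16, 4
    # Random linear "feature extractor" standing in for a real image encoder
    W = [[rng.gauss(0.0, 1.0) for _ in range(n_pixels)] for _ in range(n_features)]
    artwork = [rng.random() for _ in range(n_pixels)]
    # Stand-in for a style-transferred copy of the artwork
    style_target = [rng.random() for _ in range(n_pixels)]

    cloaked = cloak(artwork, style_target, W)
    print("max pixel change:", max(abs(c - a) for c, a in zip(cloaked, artwork)))
    print("feature distance before:", feature_distance(W, artwork, style_target))
    print("feature distance after: ", feature_distance(W, cloaked, style_target))
```

Running the script should report a maximum pixel change no larger than `budget` and a smaller feature distance after cloaking than before: the image barely changes, but what the model "sees" has shifted toward the other style. Projected gradient descent is used here only because it is the simplest way to trade "move the features" against "barely touch the pixels"; a real system would rely on a deep encoder and a perceptual similarity measure instead of a per-pixel limit.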
Glaze coming soon as a free tool

In the coming weeks, the researchers plan to release Glaze as a free tool for Windows and macOS that artists can use to cloak their images before posting them online. The researchers' surveys of artists indicate strong demand for such a tool: over 90% of the artists surveyed want to use Glaze to protect their images online in the future.

Model of Glaze

However, the researchers cannot guarantee that Glaze will keep working in the future, because in a kind of arms race the other side, i.e. the companies behind the image AIs, could adapt to the protection measures and circumvent them. They therefore point out that Glaze is only a temporary solution that offers some protection, and that lasting protection for artists and their works can only be achieved through regulation.

German version of this page: Künstler vs KIs: Neues Tool macht Kunstwerke für KIs unverdaulich