[08:35 Wed, 7 October 2020 by Rudi Schmidts]

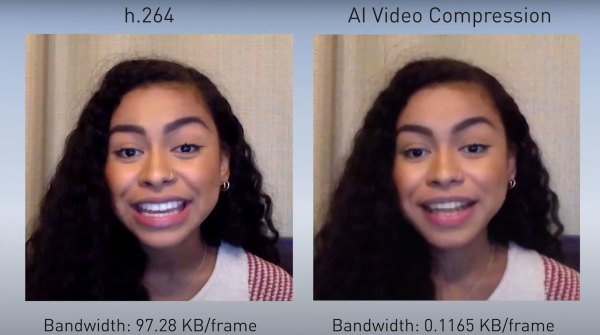

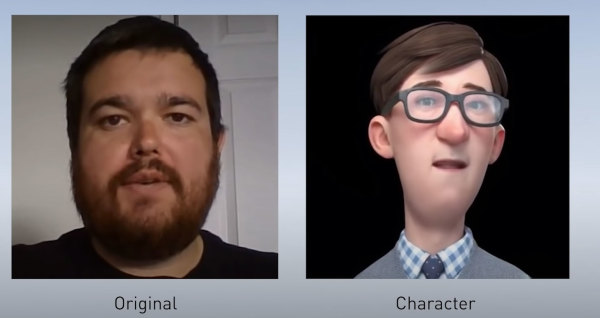

This is not really surprising: Nvidia has introduced a new AI application that significantly reduces the data rate of video streams. Under the name AI video compression, Nvidia cuts the transmitted streaming data down to key points of the face, such as the position of the eyes or the opening of the mouth. From this data plus a previously transmitted reference image, a GAN (Generative Adversarial Network) on the receiving end then computes a plausible representation of the speaking person, with fluid motion.

As the upper picture clearly shows, the AI-compressed image does not match the image that was actually recorded; it is "only" a plausible prediction of what the original might look like at that moment at the other end of the transmission. Since only primary motion and position data is transmitted, the technology also makes it possible to turn the head interactively and let the person shown look in a different direction. In the case shown here, a sideways gaze is corrected into a direct look into the camera.

The algorithm is likely to run into problems as soon as a user holds an object up to the camera or the face is briefly covered. The whole thing will therefore probably only be useful for pure "talking heads" applications, where the personal nuances of the other person are largely irrelevant. Nvidia will also present a special use case that we believe has much greater potential for success: replacing the speaker with a convincingly animated character.

This could really bring a breath of fresh air into many boring video conferences...
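To get a feel for why sending only facial key points saves so much data, here is a minimal back-of-the-envelope sketch in Python. It is not Nvidia's format: the number of key points (68), the encoding (two 16-bit integers per point), the frame rate (30 fps) and the helper names `pack_keypoints`, `unpack_keypoints` and `bitrate_kbps` are all assumptions made for illustration. The reference image and the GAN that renders the face are left out entirely.

```python
import struct

# Assumptions for illustration only; the article gives no such figures.
NUM_KEYPOINTS = 68   # hypothetical number of facial key points per frame
FPS = 30             # hypothetical frame rate


def pack_keypoints(points):
    """Serialize normalized (x, y) key points as little-endian 16-bit integers."""
    payload = bytearray()
    for x, y in points:
        if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
            raise ValueError("key point coordinates must be normalized to [0, 1]")
        payload += struct.pack("<HH", round(x * 65535), round(y * 65535))
    return bytes(payload)


def unpack_keypoints(payload):
    """Inverse of pack_keypoints: recover the (x, y) pairs on the receiver side."""
    count = len(payload) // 4  # 4 bytes per key point
    values = struct.unpack("<" + "HH" * count, payload)
    return [(values[i] / 65535, values[i + 1] / 65535)
            for i in range(0, len(values), 2)]


def bitrate_kbps(bytes_per_frame, fps):
    """Data rate in kbit/s for a fixed payload size per frame."""
    return bytes_per_frame * 8 * fps / 1000


# Dummy key points standing in for detected eye, mouth and contour positions.
points = [(i / NUM_KEYPOINTS, 0.5) for i in range(NUM_KEYPOINTS)]
payload = pack_keypoints(points)

print(len(payload), "bytes per frame")
print(bitrate_kbps(len(payload), FPS), "kbit/s")
```

Under these assumptions each frame costs only a few hundred bytes, far less than a conventionally encoded video frame. The real saving depends on how many key points are sent, how they are encoded, and how often the reference image has to be refreshed.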