[11:44 Tue, 8 August 2023 by Rudi Schmidts]

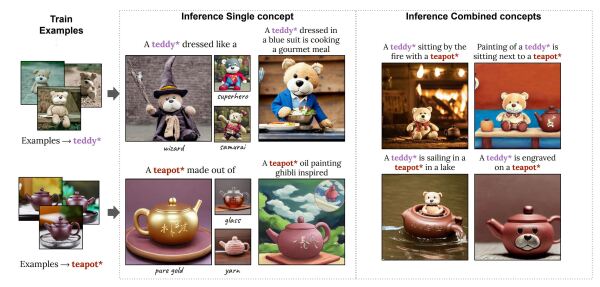

Yet another major advance in generative AI research that Nvidia will officially present at Siggraph 2023: "Perfusion" is the name of a new text-to-image (T2I) personalization method that is supposed to make it particularly easy to "train" your own people and objects into an AI image generator.

According to Nvidia, using it is also very simple. You show the network a few photos and supply a text prompt describing who or what can be seen in them, directly followed by an asterisk (*). This starred term can then be used in the diffusion model alongside the other prompt words to describe the image. It should even be possible to "train" multiple objects in this way.

The key innovation in Perfusion is called "key-locking." In this approach, new concepts desired by the user, such as a particular cat or chair, are linked to a broader category during image generation. For example, the specific cat is linked to the general idea of a "cat." This technique allows for more precise matching, because the specifics of the newly trained object are taken into account in the representation of the general category. It can therefore be assumed that, as a consequence, all cats will strongly resemble the newly trained cat, which could complicate training several different cats or people.

However, despite a timely release of the code, widespread local use will be hindered by the required 27 GB of GPU memory. Nvidia's largest consumer GPUs currently ship with a maximum of 24 GB, which is just too little to try out Perfusion. We recently pointed out exactly this kind of upcoming problem.
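The asterisk convention and the memory limit described above can be illustrated with a minimal sketch. This is not Perfusion's actual interface: the helper names `mark_concept`, `build_prompt` and `fits_in_vram` are hypothetical and only mirror what the article states, namely that the concept word is directly followed by an asterisk and that 27 GB of GPU memory are required.

```python
# Hypothetical helpers for illustration only; not part of Nvidia's Perfusion code.

REQUIRED_VRAM_GB = 27  # memory requirement quoted in the article


def mark_concept(concept_word: str) -> str:
    """Return the concept word directly followed by an asterisk, e.g. 'cat*'."""
    return f"{concept_word.strip()}*"


def build_prompt(template: str, concept_word: str) -> str:
    """Insert the starred concept term into a prompt template.

    The template must contain a '{concept}' placeholder (an assumption of
    this sketch, not a Perfusion requirement).
    """
    return template.format(concept=mark_concept(concept_word))


def fits_in_vram(available_gb: float, required_gb: float = REQUIRED_VRAM_GB) -> bool:
    """Check whether a GPU with the given memory meets the stated requirement."""
    return available_gb >= required_gb


if __name__ == "__main__":
    # The starred term is mixed with ordinary prompt words.
    print(build_prompt("a photo of a {concept} sitting on a chair", "cat"))
    # A 24 GB consumer card falls short of the 27 GB quoted in the article.
    print(fits_in_vram(24))
```

Under these assumptions, a 24 GB card fails the check, which matches the article's point that current consumer GPUs are just below the stated requirement.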