Is it Christmas already? For years we waited, and doubted, whether we would ever live to see this (which unfortunately didn't work out for Emperor Franz): with the new GeForce RTX 50 series, Nvidia is bringing true 10-bit 4:2:2 hardware decoding!

And it's about time, because alongside RAW, 10-bit 4:2:2 Log is the most efficient format for recording footage with modern cameras for professional post-processing. Apple has supported hardware decoding of these formats for years, but on Windows PCs it was possible only with Intel CPUs until now. Anyone with only AMD or Nvidia components in their computer had to decode these codecs in software, which wastes computing power and often results in stuttering playback on the timeline.
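To check whether a clip actually falls into this category, one option is to look at its pixel format name. The sketch below assumes FFmpeg-style planar YUV names such as `yuv422p10le` (for example, as reported by a probing tool); the helper names `describe_pix_fmt` and `is_10bit_422` are hypothetical and only illustrate the idea. It also shows why 10-bit 4:2:2 carries more data than the common 8-bit 4:2:0: 20 bits per pixel instead of 12 before compression.

```python
import re

# Average samples per pixel (luma plus both chroma planes) per subsampling scheme.
SAMPLES_PER_PIXEL = {"420": 1.5, "422": 2.0, "444": 3.0}


def describe_pix_fmt(pix_fmt: str):
    """Parse an FFmpeg-style planar YUV name such as 'yuv422p10le'.

    Returns (subsampling, bit_depth, uncompressed_bits_per_pixel), or None
    if the name does not follow the 'yuvXXXp[NN][le|be]' pattern.
    """
    match = re.fullmatch(r"yuvj?(420|422|444)p(\d+)?(le|be)?", pix_fmt)
    if match is None:
        return None
    subsampling = match.group(1)
    # Names without an explicit depth (e.g. 'yuv420p') are 8-bit.
    bit_depth = int(match.group(2)) if match.group(2) else 8
    bits_per_pixel = SAMPLES_PER_PIXEL[subsampling] * bit_depth
    return subsampling, bit_depth, bits_per_pixel


def is_10bit_422(pix_fmt: str) -> bool:
    """True if the pixel format is 10-bit with 4:2:2 chroma subsampling."""
    info = describe_pix_fmt(pix_fmt)
    return info is not None and info[0] == "422" and info[1] == 10


if __name__ == "__main__":
    for name in ("yuv420p", "yuv420p10le", "yuv422p10le"):
        print(name, describe_pix_fmt(name), is_10bit_422(name))
```

Semi-planar or packed formats (names that do not match the pattern) simply return `None` here; a real tool would need a fuller lookup table.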

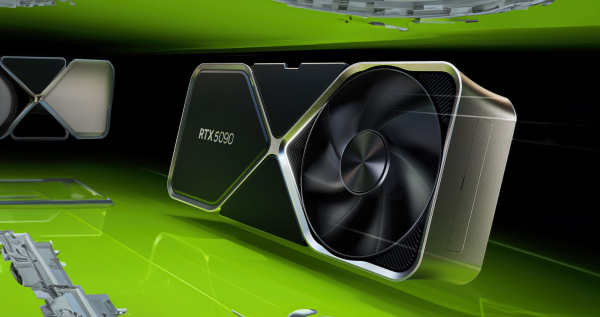

Nvidia's new RTX 50 GPUs finally support 10-bit 4:2:2 decoding

Incidentally, Nvidia refers to this as "Pro-Grade 4:2:2 Color". A single hardware decoding unit of the latest, sixth generation can now decode up to eight 4K streams at 60 fps. Furthermore, the RTX 5090 and RTX 5080 are equipped with two decoders. The RTX 5070 (and the Ti model) will only ship with one decoding unit.

The encoder units have also been upgraded. The RTX 5090 now has three, the RTX 5080 and RTX 5070 Ti two, and only the currently cheapest RTX 5070 (without Ti) will have a single encoder.
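As a rough back-of-the-envelope comparison, the unit counts above can be tabulated. This sketch uses only the numbers quoted in the article; the assumption that throughput scales linearly with the number of decoders is ours and is not confirmed by Nvidia, and the `Card` class and function name are purely illustrative.

```python
from dataclasses import dataclass

# Up to eight 4K streams at 60 fps per decoder unit, as quoted in the article.
STREAMS_PER_DECODER = 8


@dataclass(frozen=True)
class Card:
    name: str
    decoders: int
    encoders: int


# Decoder/encoder counts as stated in the article.
CARDS = [
    Card("RTX 5090", decoders=2, encoders=3),
    Card("RTX 5080", decoders=2, encoders=2),
    Card("RTX 5070 Ti", decoders=1, encoders=2),
    Card("RTX 5070", decoders=1, encoders=1),
]


def theoretical_4k60_streams(card: Card) -> int:
    """Upper bound on simultaneous 4K60 decodes, ASSUMING linear scaling."""
    return card.decoders * STREAMS_PER_DECODER


if __name__ == "__main__":
    for card in CARDS:
        print(f"{card.name}: {card.decoders} dec / {card.encoders} enc, "
              f"up to {theoretical_4k60_streams(card)} x 4K60 (theoretical)")
```

Real-world numbers will depend on codec, bitrate, and what else the GPU is doing, so these figures are best read as ceilings.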

But even with only one encoder and one decoder, the currently smallest RTX 5070, with a suggested retail price of €649, should be a very interesting choice for budget video editing systems. With around 30 FP32 TFLOPS and 672 GB/s of memory bandwidth, it already delivers performance similar to the current top-of-the-line Mac Studio Ultra configuration, though of course without the advantages of unified memory.

Since NVDEC is directly supported in all current editing programs, Nvidia is now neutralizing one of Apple's two major advantages in video editing. And who knows, maybe Project DIGITS in May will also give Apple's unified memory some competition for mini Resolve workstations.
