[15:50 Mon, 16 September 2024 by Thomas Richter]

Runway is the first of the current video AI providers to release a state-of-the-art (SOTA) video-to-video model, which lets users change the style or specific content of their own video via AI using a prompt. What's striking is how precisely the content, image composition, and desired movements of the camera or objects can be controlled through the provided video, something that was not possible as accurately with just a text or image prompt. Runway has offered a video-to-video option since its first generation in early 2023, but only now has the quality become good enough to meet current standards.

Runway Gen-3 Alpha Video-to-Video is now available to all paying users. To use it, simply upload your own video and describe in a prompt what should change about its look. Alternatively, you can select one of the predefined styles, in which the video is then re-rendered. According to early user reports, generation is quite fast. Runway's own demos and the first clips on social media already look promising and showcase the enormous potential of the new video-to-video mode.

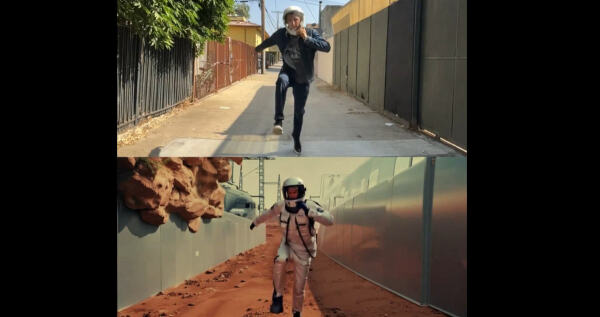

VFX via AI

The new model offers filmmakers a simple way to use complex VFX in their videos without the specialized knowledge previously required. An object or person can easily be swapped for another, their appearance can be specifically altered, or the entire setting or style of the clip can be changed. For example, an everyday scene (including wardrobe, props, and surroundings) can be transformed into a medieval or cyberpunk setting.

Similarly, the season or lighting mood can easily be adjusted, or a live-action film can be turned into an animated film in any desired style. With Gen-3, any actor can also be turned into any other character while retaining their facial expressions and movements. This also simplifies the otherwise complicated task of synchronizing a virtual character's lip movements to spoken words. Here are some more examples:

Of course, this also raises new legal questions: who owns a newly rendered and heavily altered clip that is based on someone else's video? This is demonstrated here, for example, using two scenes from "300":
