[17:13 Fri, 26 July 2024 by blip]

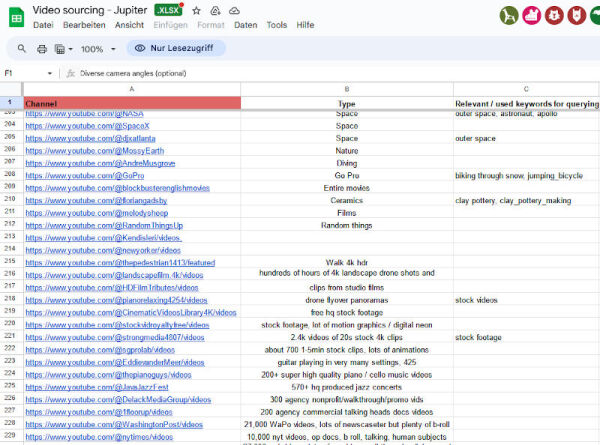

AI video models keep getting better, but the quality of their output depends heavily on the video material they learn from. So what are they trained on? All AI providers (except Adobe) are keeping a low profile on this question. OpenAI's CTO Mira Murati, for example, said in an interview that they used freely available or licensed material.

Video sourcing table

A video model must not only be able to generate convincing images; as a prerequisite, it must also “understand” what is to be rendered in each case. Image descriptions, from which the model learns the links between images and words, are extremely important for this. They are the only way to obtain matching video output from text input (prompts).

In addition to official media such as NYTimes, Vevo, Disney, arte and the like, the listed channels also include other big names such as NASA or TED, manufacturers such as GoPro, and both well-known and unknown YouTube creators. The videos in the list are said to have been downloaded from YouTube via a crawler using a proxy, bypassing the portal's security measures (downloading is not permitted under the terms of use).

Of course, it is not possible to prove directly that every listed video was actually used for training. However, for their article the journalists at 404 managed to generate sample Runway Gen-3 clips in the style of some of the listed YouTubers, which suggests that their videos are part of the current corpus. To do so, they entered the creators' names in the prompts; Runway has apparently since blocked this, but according to 404 it has not commented directly on the allegation.

As mentioned at the beginning, it has long been suspected that YouTube clips are used to train video AIs, but to our knowledge there has never been proof as concrete as in this case.
We are looking forward to the (legal) aftermath. Incidentally, when Runway Gen-1 (www.slashcam.de/news/single/Runway-Gen1--Neue-Video-KI-stilisiert-Videos--mask-17715.html) was shown for the first time in February 2023, the motto was “No lights. No camera. All action.” This leak now illustrates quite vividly that it is not possible to do without lights and cameras after all.