[11:55 Fri, 1 October 2021 by Rudi Schmidts]

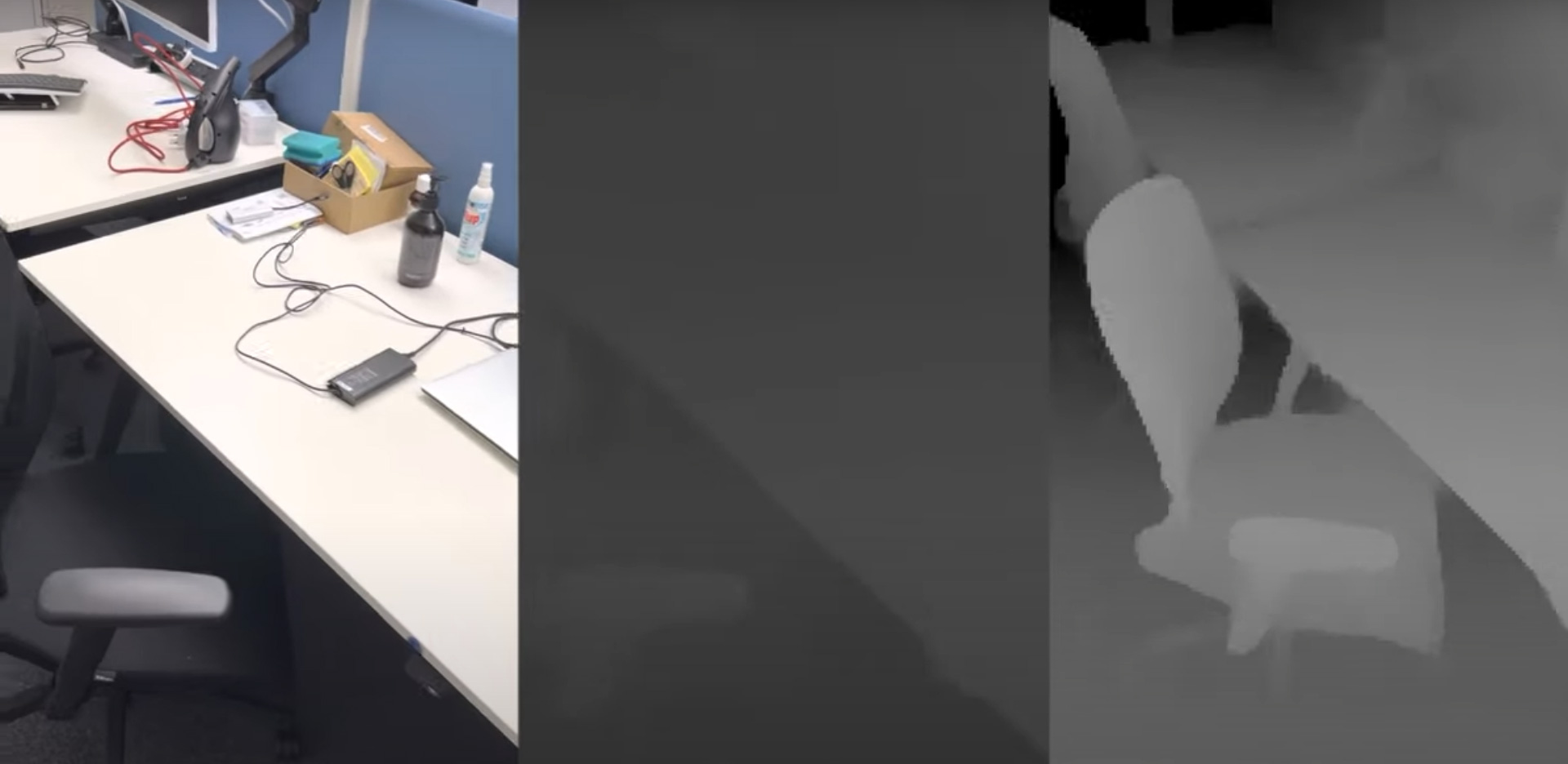

It is thus clear what has long been suspected: the iPhone records with maximum depth of field and then, in post-processing, uses a depth mask to let areas fade into synthetic blur. A video by Jan Kaiser on YouTube shows both streams side by side.

As you can clearly see, the new depth mask of the iPhone 13 is much softer than the depth masks of the iPhone 12, which were created with a LiDAR sensor. This softness is extremely important for soft (and believable) bokeh edges. It is also clearly visible that the depth mask is effective almost exclusively at close range, which is where synthetic bokeh offers the greatest potential for isolating a subject.

With an open implementation as a second data stream in a common file format, the door is now open for providers of other editing and compositing programs. At the same time, we dare to bet that other camera manufacturers will include depth information in their camera streams in a similar way in the near future. This, in turn, will let cameras with small sensors take another big piece of the pie from large-sensor cameras. After all, if bokeh can be simulated cleanly (and AI algorithms in post-production will contribute a great deal to this), the last major advantage left for large sensors is dynamic range. And even there, we believe we can already spot something new on the horizon in the field of computational imaging. But that will probably still take a little while...
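The principle described above (an all-in-focus recording that is blurred afterwards according to a depth mask) can be illustrated with a minimal sketch. This is not Apple's algorithm, which has not been published; the function names `box_blur` and `synthetic_bokeh`, the `falloff` parameter, and the convention that depth values lie between 0.0 (far) and 1.0 (near) are all assumptions made for illustration. The sketch simply blends a sharp grayscale frame with a blurred copy, weighting each pixel by its distance from the chosen focus depth.

```python
def box_blur(img, radius):
    """Blur a 2D grayscale image (list of rows of floats) with a box filter."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Average all pixels inside the window, clipped at the image borders.
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def synthetic_bokeh(sharp, depth, focus_depth, falloff=0.25, radius=2):
    """Blend sharp and blurred frames per pixel, guided by a depth mask.

    Assumption: depth values are normalized to 0.0 (far) .. 1.0 (near).
    """
    blurred = box_blur(sharp, radius)
    h, w = len(sharp), len(sharp[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # 0.0 = fully sharp, 1.0 = fully blurred. The linear ramp over
            # `falloff` is what makes the transition at the edges soft.
            weight = min(1.0, abs(depth[y][x] - focus_depth) / falloff)
            out[y][x] = (1.0 - weight) * sharp[y][x] + weight * blurred[y][x]
    return out


# Checkerboard frame; left half is near (depth 1.0), right half is far (0.0).
sharp = [[float((x + y) % 2) for x in range(4)] for y in range(4)]
depth = [[1.0, 1.0, 0.0, 0.0] for _ in range(4)]
result = synthetic_bokeh(sharp, depth, focus_depth=1.0, radius=1)
```

A softer depth mask, with many intermediate values instead of a hard near/far step, yields intermediate blend weights and therefore smoother bokeh edges. A more realistic renderer would presumably also vary the blur radius with depth rather than blending with a single blurred copy.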

Until now, very little was known about exactly how Apple computes the synthetic bokeh in recordings made with the new Cinematic Mode. However, Jan Kaiser has now shared on Twitter a simple way to extract the depth image stream from the new format.
