[11:28 Thu, 22 September 2022, by Thomas Richter]

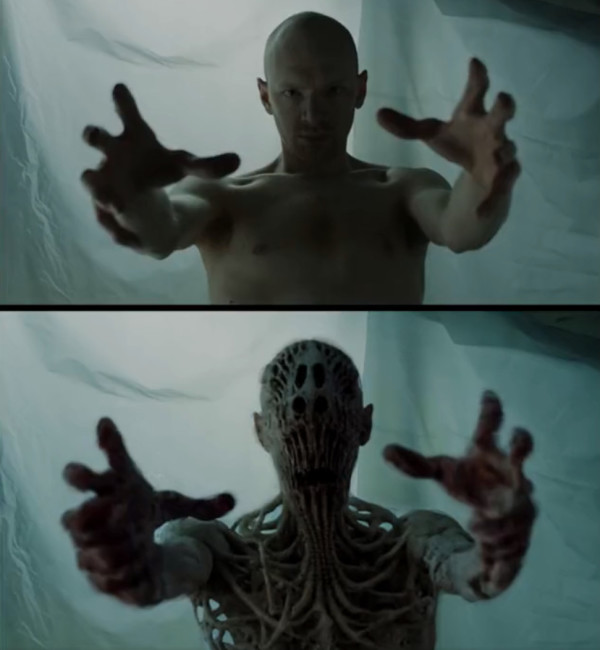

Alternative titles: […]

Filmmaker and musician […] Each was based on a short video of himself, scaled to 1,024 × 1,024 pixels. With the help of Stable Diffusion per […]

Even more blatant is his following […]

The horror motif is particularly well suited to these demos. The imperfect style transfer via Ebsynth produces shifting artifacts that would be distracting in other contexts, but here they tend to heighten the shock effect.

The classic workflow would have been far more complicated. First, the speaker's face would have had to be captured as a 3D model using face capture. Then a 3D model of the new face would have had to be built in a 3D modeling program, mapped onto the original face, and rendered. All of this requires a great deal of specialized knowledge in addition to the right tools.

In contrast, the simple AI workflow and its relatively easy-to-use tools produced very different, alienated versions of the original video in a short time. This is a small foretaste of the extensive possibilities opened up by AI tools for images, and also video, which are currently developing at an explosive pace.

The whole thing is just a small demonstration of the effects that can be achieved by combining several AI tools, even before there are AIs specialized entirely in video that can freely create or edit whole videos on demand from a text description alone, much as Stable Diffusion or DALL-E 2 can already do with images today. If such tools arrive and resolution and image quality continue to improve, then after the revolution in filmmaking that has so far been driven primarily by hardware, AI tools will ignite the next stage by making professional yet easy-to-create special effects (and animations) accessible to a broad audience.

German version of this page: VFX leicht gemacht: mit KI Gesichter animieren und Stimmen synthetisieren (VFX made easy: animating faces and synthesizing voices with AI)
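The preprocessing described in this article, scaling the source clip to 1,024 × 1,024 and stylizing frames with Stable Diffusion before Ebsynth carries the new look across the clip, can be planned with a few lines of Python. This is a minimal sketch under stated assumptions, not the filmmaker's actual pipeline: center-cropping the frame to a square before scaling, the keyframe spacing `interval`, and all function names (`center_square_crop`, `scale_factor`, `keyframe_indices`) are hypothetical. The sketch only computes crop geometry and frame indices; the resizing itself, the Stable Diffusion runs, and the Ebsynth propagation would happen in the respective tools.

```python
from dataclasses import dataclass

TARGET = 1024  # target edge length from the article (1,024 x 1,024)


@dataclass(frozen=True)
class CropBox:
    left: int  # x offset of the crop in source pixels
    top: int   # y offset of the crop in source pixels
    size: int  # side length of the square crop in source pixels


def center_square_crop(width: int, height: int) -> CropBox:
    """Assumption: largest centered square that fits in a width x height frame."""
    if width <= 0 or height <= 0:
        raise ValueError("frame dimensions must be positive")
    size = min(width, height)
    return CropBox(left=(width - size) // 2, top=(height - size) // 2, size=size)


def scale_factor(box: CropBox, target: int = TARGET) -> float:
    """Factor that maps the square crop onto a target x target image."""
    return target / box.size


def keyframe_indices(frame_count: int, interval: int) -> list[int]:
    """Hypothetical spacing: stylize every `interval`-th frame (plus the last
    frame) with Stable Diffusion and let Ebsynth fill in the frames between."""
    if frame_count <= 0 or interval <= 0:
        raise ValueError("frame_count and interval must be positive")
    keys = list(range(0, frame_count, interval))
    if keys[-1] != frame_count - 1:
        keys.append(frame_count - 1)  # anchor the end of the clip as well
    return keys


if __name__ == "__main__":
    box = center_square_crop(1920, 1080)
    print(box)                         # CropBox(left=420, top=0, size=1080)
    print(round(scale_factor(box), 4))
    print(keyframe_indices(100, 25))   # [0, 25, 50, 75, 99]
```

Adding the last frame as a keyframe is a design choice so that the end of the clip is not extrapolated from a distant keyframe; denser spacing generally trades more Stable Diffusion work for fewer propagation artifacts.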