[16:05 Thu, 20 May 2021 by Thomas Richter]

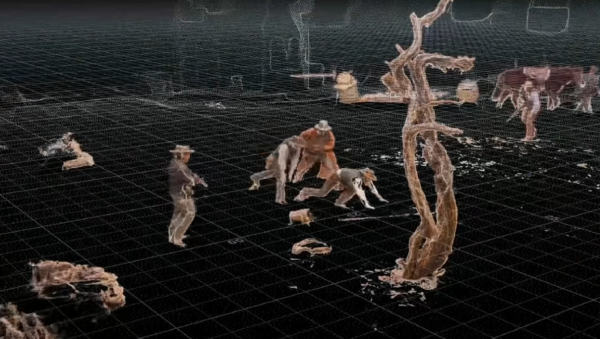

Seven major companies (Verizon, ZEISS, RED Digital Cinema, Unity, Intel, NVIDIA and Canon) have founded the Volumetric Format Association (VFA), the first industry association that aims to create standards for a volumetric video ecosystem and to bring the technology to the attention of a wider public in the first place.

Four working groups are tasked with developing specifications for volumetric data acquisition, the exchange of that data, decoding and rendering, and metadata.

What is volumetric video anyway?

Volumetric video can be understood as a kind of holographic video of a scene or object that allows virtual camera movements through it. Today it is produced in specialized studios equipped with a large number of cameras (and possibly other sensors such as lidar) arranged in a kind of dome around the actual scene.

[Image: Volumetric dome with many cameras]

All cameras record the scene synchronously from their respective viewpoints, resulting in massive data rates of up to 600 GB per minute (about 10 GB per second). From these clips, moving point clouds can then be computed. They can be thought of either as a computed 3D world derived from the filmed scene, or as classical pixels to which additional spatial information has been assigned (= voxels). Afterwards, a virtual camera can move almost completely freely through this scene, so almost any camera position can be defined in post-production.

[Image: Recordings of the different cameras]

The software packages used in this process are still custom-built, which is why the recordings cannot be edited in common editing programs outside the studios themselves. Everyone (including Microsoft) still works with their own proprietary solutions, so standardization by the VFA would be an important first step toward making volumetric video easier to work with.
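The link described above between pixels with added spatial information, point clouds, and a freely placed virtual camera can be sketched in a few lines of Python. This is only a minimal illustration under simplifying assumptions, not the method of any studio or of the VFA: it assumes an idealized pinhole camera, a known depth value per pixel, and rotation around the vertical axis only. The names `Camera`, `back_project`, `project` and `render` are hypothetical and chosen for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Idealized pinhole camera (hypothetical model for illustration)."""
    focal_px: float        # focal length in pixels
    cx: float              # principal point, x (pixels)
    cy: float              # principal point, y (pixels)
    position: tuple        # camera centre in world coordinates (x, y, z)
    yaw: float = 0.0       # rotation around the vertical (y) axis, radians


def _rotate_y(v, angle):
    """Rotate vector v around the y axis by angle (radians)."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def back_project(cam, u, v, depth):
    """Turn pixel (u, v) with a known depth into a 3D world point."""
    # Point in camera coordinates along the viewing ray of the pixel.
    p_cam = ((u - cam.cx) / cam.focal_px * depth,
             (v - cam.cy) / cam.focal_px * depth,
             depth)
    # Camera -> world: rotate, then translate by the camera position.
    xw, yw, zw = _rotate_y(p_cam, cam.yaw)
    px, py, pz = cam.position
    return (xw + px, yw + py, zw + pz)


def project(cam, point):
    """Project a world point into cam; returns (u, v, depth) or None."""
    px, py, pz = cam.position
    rel = (point[0] - px, point[1] - py, point[2] - pz)
    # World -> camera: inverse rotation.
    x, y, z = _rotate_y(rel, -cam.yaw)
    if z <= 0:
        return None  # point lies behind the camera
    return (cam.focal_px * x / z + cam.cx,
            cam.focal_px * y / z + cam.cy,
            z)


def render(cam, cloud, width, height):
    """Splat coloured points into a sparse image, nearest point wins."""
    nearest = {}  # (col, row) -> (depth, colour)
    for point, colour in cloud:
        hit = project(cam, point)
        if hit is None:
            continue
        u, v, z = hit
        col, row = int(round(u)), int(round(v))
        if 0 <= col < width and 0 <= row < height:
            if (col, row) not in nearest or z < nearest[(col, row)][0]:
                nearest[(col, row)] = (z, colour)
    return {pixel: colour for pixel, (_, colour) in nearest.items()}


# One pixel seen by a studio camera becomes a coloured 3D point ...
studio_cam = Camera(focal_px=1000.0, cx=960.0, cy=540.0,
                    position=(0.0, 0.0, 0.0))
cloud = [(back_project(studio_cam, 960.0, 540.0, 2.0), (255, 0, 0))]

# ... which a virtual camera placed one metre to the side can re-render.
virtual_cam = Camera(focal_px=1000.0, cx=960.0, cy=540.0,
                     position=(1.0, 0.0, 0.0))
frame = render(virtual_cam, cloud, width=1920, height=1080)
```

A real pipeline would fuse the views of many calibrated cameras, handle full 3D rotations and lens distortion, and fill the holes between points. The sketch only shows why a point cloud, unlike a flat video frame, can be viewed from a position where no physical camera stood.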
Volumetric video - what for?

The volumetric point cloud/video data can be used for classic 2D video (which is then, however, freed from the fixed camera position) as well as for 3D video and, in the future, for holographic video. At sporting events or concerts, for example, viewers could decide for themselves where to watch the action from, or even control their virtual camera themselves. Especially in 3D, this interactivity could give viewers a previously unknown level of immersion in both fictional and real scenarios, something that was previously only possible in (VR) computer games. Depending on the technology used to view these videos (2D monitor, 3D monitor, …

For filmmakers, volumetric video opens up new possibilities: the now virtual camera can, for example, perform camera movements that were previously possible only in CGI scenes. The director is completely free to decide which part of the scene is shown from which perspective. The following clip nicely explains and demonstrates the new possibilities created by volumetric video:

Volumetric video via AI

AI could bring about a kind of democratization of volumetric video, i.e. a reduction of the enormously high hardware effort required so far. For example, Generative Query Networks (GQNs) can already generate a full 3D model from just a handful of 2D snapshots of a scene through a kind of three-dimensional "understanding" of the scene. The system can then imagine the scene from any angle and reproduce it. It's also worth noting that the system relies solely on input from its own image sensors and learns autonomously without human supervision. It could thus interpret models (e.g., a scene in an editing program) as 3D space without human intervention. Such GQNs still have narrow limits, but these are being extended with each new generation.